As the US moves toward criminalizing deepfakes (deceptive AI-generated audio, images, and videos that are increasingly hard to distinguish from authentic content online), tech companies have rushed to roll out tools to help everyone better detect AI content.

But efforts so far have been imperfect, and experts fear that social media platforms may not be ready to handle the ensuing AI chaos during major global elections in 2024, despite tech giants committing to build tools specifically to combat AI-fueled election disinformation. The best AI detection remains observant humans, who, by paying close attention to deepfakes, can pick up on flaws like AI-generated people with extra fingers or AI voices that speak without pausing for a breath.

Among the splashiest tools announced this week, OpenAI today shared details about a new AI image detection classifier that it claims can detect about 98 percent of images produced by its own sophisticated image generator, DALL-E 3. It also “currently flags approximately 5 to 10 percent of images generated by other AI models,” OpenAI’s blog said.

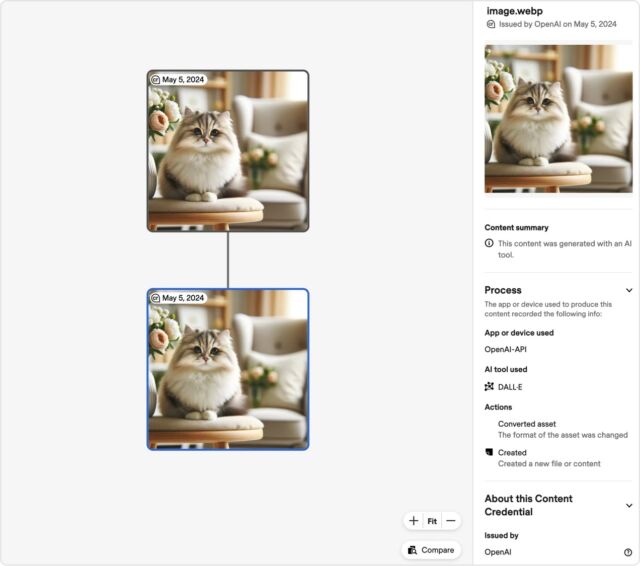

According to OpenAI, the classifier provides a binary “true/false” response “indicating the likelihood of the image being AI-generated by DALL·E 3.” A screenshot of the tool shows that it can also display a simple content summary confirming that “this content was generated with an AI tool,” along with fields that ideally identify the “app or device” and the AI tool used.

To develop the tool, OpenAI spent months adding tamper-resistant metadata to “all images created and edited by DALL·E 3” that “can be used to prove the content comes” from “a particular source.” The detector reads this metadata to accurately flag DALL-E 3 images as AI-generated.

That metadata follows “a widely used standard for digital content certification,” often likened to a nutrition label, set by the Coalition for Content Provenance and Authenticity (C2PA). Reinforcing that standard has become “an important aspect” of OpenAI’s approach to AI detection beyond DALL-E 3, OpenAI said. When OpenAI broadly launches its video generator, Sora, C2PA metadata will be integrated into that tool as well, the company said.

Of course, this solution is not comprehensive, because that metadata can always be removed, and “people can still create deceptive content without this information (or can remove it),” OpenAI said, “but they cannot easily fake or alter this information, making it an important resource to build trust.”

Because OpenAI is all in on C2PA, the AI leader announced today that it will join the C2PA steering committee to help drive broader adoption of the standard. OpenAI will also launch a $2 million fund with Microsoft to support broader “AI education and understanding,” seemingly in part out of hope that the more people understand the importance of AI detection, the less likely they will be to remove this metadata.

“As adoption of the standard increases, this information can accompany content through its lifecycle of sharing, modification, and reuse,” OpenAI said. “Over time, we believe this kind of metadata will be something people come to expect, filling a crucial gap in digital content authenticity practices.”

OpenAI joining the committee “marks a significant milestone for the C2PA and will help advance the coalition’s mission to increase transparency around digital media as AI-generated content becomes more prevalent,” C2PA said in a blog post.