Images created by Microsoft’s Copilot

While AI is under attack for copying existing works without permission, the industry could end up getting into more legal trouble over trademarks.

The rise in interest in generative AI has also led to a rise in complaints about the technology. Along with the complaints that the AI can often be incorrect, there are often issues with the sourcing of content to train the models in the first place.

This has already prompted some legal action, such as Conde Nast sending a cease and desist to AI startup Perplexity over its use of content from Conde Nast publications.

There are some instances where the companies producing AI are doing the right thing. For example, Apple has offered to pay publishers for access for training purposes.

However, there may be a bigger problem on the horizon, especially for image-based generative AI, one that goes beyond deep fakes: the issue of trademarks and product designs.

Highly protected

Major companies are very protective of their trademarks, copyrights, and intellectual property and will go to great lengths to keep them protected. They will also put a lot of effort into sending lawyers after people infringing on their properties, with a view to securing a hefty financial payoff in some situations.

Since generative AI services that create images are often trained on photos of millions of items, it makes sense that they are also aware of the existence of product and company logos, product names, and product designs.

Cookie Monster eating a Chrome cookie, generated by Copilot

The problem is that this leaves those who generate images through such services open to legal action if their images contain designs and elements that are too closely based on existing trademarks or products.

In many cases, commercial generative AI image services do act to protect themselves and users from being subjected to lawsuits, by including rules the models follow. These rules often include lists of items or actions that the models will not generate.

However, this isn’t always the case, and the rules aren’t always applied evenly across the board.

Monsters, mice, and flimsy rules

On Tuesday, in a bid to create images for a cookie-based news story, one AppleInsider editorial team member wondered if something could be made for the article using AI. An offhand request was made to generate an image of “Cookie Monster eating Google Chrome icons like they are cookies.”

Surprisingly, Microsoft Copilot generated an image of just that, with a detailed picture of the Sesame Street character about to eat a cookie bearing the Chrome icon.

AppleInsider did not use the image in the article, but it did raise questions about how much legal trouble a person could get into using generative AI images.

A test was made against ChatGPT 4 with the same Cookie Monster/Chrome request, and it came out with a similar result.

Adobe Firefly rejecting trademark-based queries

Adobe Firefly offered a different result altogether in that it ignored both the Google Chrome and Cookie Monster elements. Instead, it created monsters eating cookies, a literal monster made from cookies, and a cat eating a cookie.

More importantly, it displayed a message warning “One or more words may not meet User Guidelines and were removed.” Those guidelines, accessible via a link, have a large section titled “Be Respectful of Third-Party Rights.”

The text basically says that users should not violate third-party copyrights, trademarks, and other rights. Evidently, Adobe also proactively checks the prompts for potential rule violations before generating images.

We then tried to use the same services to create images based on entities backed by more litigious and protective companies: Apple and Disney.

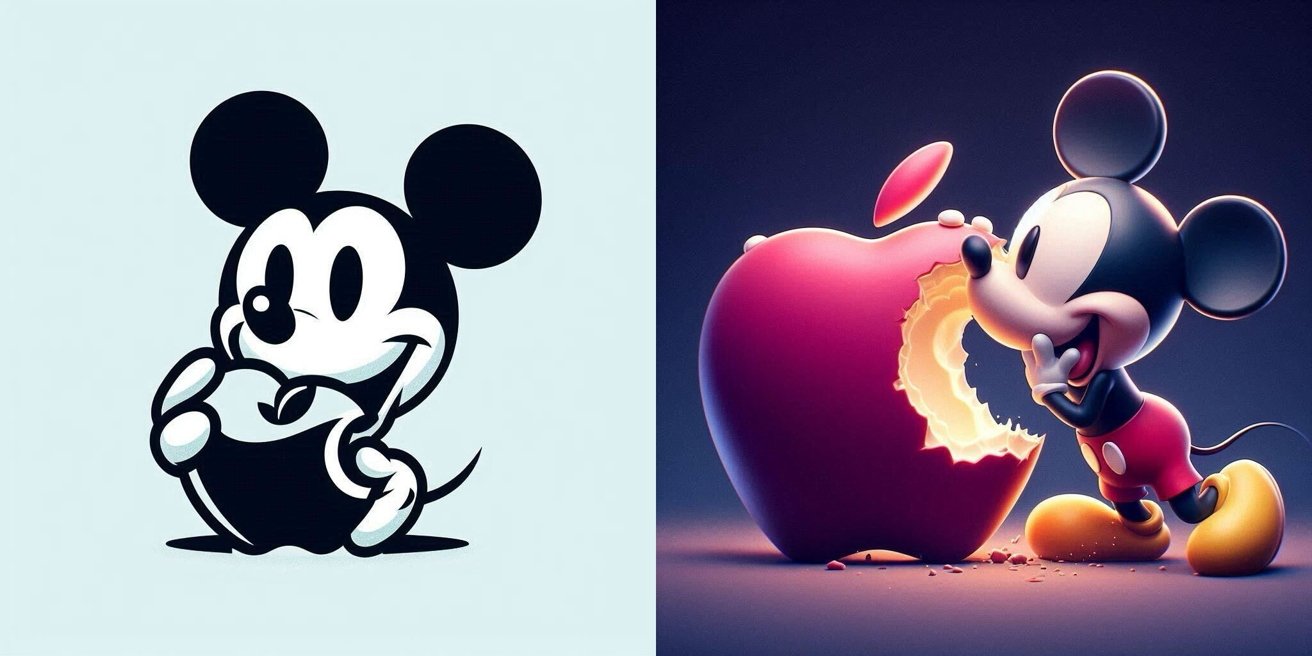

To each of Copilot, Firefly, and ChatGPT 4, we fed the prompt “Mickey Mouse taking a bit out of an Apple logo.”

Firefly again declined to proceed with the prompt, but so did ChatGPT 4. Evidently, OpenAI is keen to play it safe and not rile either of the companies at all.

Two images of Mickey Mouse eating the Apple logo, generated by Copilot

But then, Microsoft’s Copilot decided to create the images. The first was a fairly stylized black-and-white effort, while the second looked more like someone at Pixar had created the image.

It seemed that, while some services are keen to avoid any legal wrangling with well-heeled opponents, Microsoft is more open to continuing without fear of repercussion.

Plausible products

AppleInsider also tried generating images of iPhones using actual model names. It's evident that Copilot knows what an iPhone is, but its designs are not quite up to date.

For example, the generated iPhones keep including the notch, rather than moving to the Dynamic Island that newer models use.

We were also able to generate an image of an iPhone next to a fairly comically-sized device reminiscent of Samsung Galaxy smartphones. One generated image even included odd combinations of earphones and pens.

Apple products generated by Copilot

Tricking the services into producing an image of Tim Cook holding an iPhone did not work. However, “Godzilla holding an iPhone” worked fine in Copilot.

As for other Apple products, one early result was an older and thick style of iMac, complete with an Apple keyboard and fairly correct styling. However, for some reason, a hand was using a stylus on the display, which is quite incorrect.

It seems, at least, that Apple products are fairly safe to try to produce using the services, if only because the results are based on older designs.

Copilot’s legal leanings

While a dodgy generative AI image containing a company’s logo or product could be a legal issue in waiting, it seems that Microsoft is confident in Copilot’s ability to avoid such problems.

A Microsoft blog post from September 2023, updated in May 2024, said that Microsoft would help “defend the customer and pay the amount of any adverse judgments or settlements that result from the lawsuit, as long as the customer used the guardrails and content filters we have built into our products.”

It appears that this only applies to commercial customers, not consumer or personal users who may not necessarily use the generated images for commercial purposes.

If Microsoft’s commercial clients are signed up to use the same AI technologies and have the same guidelines as consumers using the service, this could be a potential legal nightmare for Microsoft down the road.

Apple Intelligence

Everyone is aware that Apple Intelligence is on the way. The collection of features includes some for handling text and some for queries, but a lot for generative AI imagery.

The last category is dominated by Image Playground, an app that uses text prompts and suggests additional influences to create images. In some applications, the system works on-page, combining subjects within the document to create a custom image to fill unoccupied page space.

As one of the companies more inclined to protect its IP, and having already demonstrated that it wants to be responsible in how it uses the technology, Apple may be quite strict in how Apple Intelligence generates images. It may well avoid most of the trademark and copyright issues others have to deal with.

Apple’s keynote example was of a mother dressed like a generic superhero, not a more specific character like Wonder Woman. However, we can’t really know how these tools work until Apple releases the feature to the public.