Firefly, Adobe’s family of generative AI models, doesn’t have the best reputation among creatives.

The Firefly image generation model in particular has been derided as underwhelming and flawed compared to Midjourney, OpenAI’s DALL-E 3, and other rivals, with a tendency to distort limbs and landscapes and miss the nuances in prompts. But Adobe is trying to right the ship with its third-generation model, Firefly Image 3, releasing this week during the company’s Max London conference.

The model, now available in Photoshop (beta) and Adobe’s Firefly web app, produces more “realistic” imagery than its predecessor (Image 2) and its predecessor’s predecessor (Image 1) thanks to an ability to understand longer, more complex prompts and scenes as well as improved lighting and text generation capabilities. It should more accurately render things like typography, iconography, raster images and line art, says Adobe, and is “significantly” more adept at depicting dense crowds and people with “detailed features” and “a variety of moods and expressions.”

For what it’s worth, in a brief, unscientific comparison, Image 3 does appear to be a step up from Image 2.

I wasn’t able to try Image 3 myself. But Adobe PR sent a few outputs and prompts from the model, and I managed to run those same prompts through Image 2 on the web to get samples to compare the Image 3 outputs with. (Keep in mind that the Image 3 outputs could’ve been cherry-picked.)

Notice the lighting in this headshot from Image 3 compared to the one below it, from Image 2:

From Image 3. Prompt: “Studio portrait of young woman.”

Same prompt as above, from Image 2.

The Image 3 output looks more detailed and lifelike to my eyes, with shadowing and contrast that’s largely absent from the Image 2 sample.

Here’s a set of images showing Image 3’s scene understanding at play:

From Image 3. Prompt: “An artist in her studio sitting at desk looking pensive with tons of work and ethereal.”

Same prompt as above. From Image 2.

Note the Image 2 sample is fairly basic compared to the output from Image 3 in terms of the level of detail and overall expressiveness. There’s wonkiness going on with the subject’s shirt in the Image 3 sample (around the waist area), but the pose is more complex than that of the subject from Image 2. (And Image 2’s clothes are also a bit off.)

Some of Image 3’s improvements can no doubt be traced to a larger and more diverse training data set.

Like Image 2 and Image 1, Image 3 is trained on uploads to Adobe Stock, Adobe’s royalty-free media library, along with licensed and public domain content for which the copyright has expired. Adobe Stock grows all the time, and consequently so too does the available training data set.

In an effort to ward off lawsuits and position itself as a more “ethical” alternative to generative AI vendors who train on images indiscriminately (e.g. OpenAI, Midjourney), Adobe has a program to pay Adobe Stock contributors to the training data set. (We’ll note that the terms of the program are rather opaque, though.) Controversially, Adobe also trains Firefly models on AI-generated images, which some consider a form of data laundering.

Recent Bloomberg reporting revealed AI-generated images in Adobe Stock aren’t excluded from Firefly image-generating models’ training data, a troubling prospect considering those images might contain regurgitated copyrighted material. Adobe has defended the practice, claiming that AI-generated images make up only a small portion of its training data and go through a moderation process to ensure they don’t depict trademarks or recognizable characters or reference artists’ names.

Of course, neither diverse, more “ethically” sourced training data nor content filters and other safeguards guarantee a perfectly flaw-free experience; see users generating people flipping the bird with Image 2. The real test of Image 3 will come once the community gets its hands on it.

New AI-powered features

Image 3 powers several new features in Photoshop beyond enhanced text-to-image.

A new “style engine” in Image 3, along with a new auto-stylization toggle, allows the model to generate a wider array of colors, backgrounds and subject poses. Both feed into Reference Image, an option that lets users condition the model on an image whose colors or tone they want their future generated content to align with.

Three new generative tools (Generate Background, Generate Similar and Enhance Detail) leverage Image 3 to perform precision edits on images. The (self-descriptive) Generate Background replaces a background with a generated one that blends into the existing image, while Generate Similar offers variations on a selected portion of a photo (a person or an object, for example). As for Enhance Detail, it “fine-tunes” images to improve sharpness and clarity.

If these features sound familiar, that’s because they’ve been in beta in the Firefly web app for at least a month (and in Midjourney for much longer than that). This marks their Photoshop debut, albeit in beta.

Speaking of the web app, Adobe isn’t neglecting this alternate route to its AI tools.

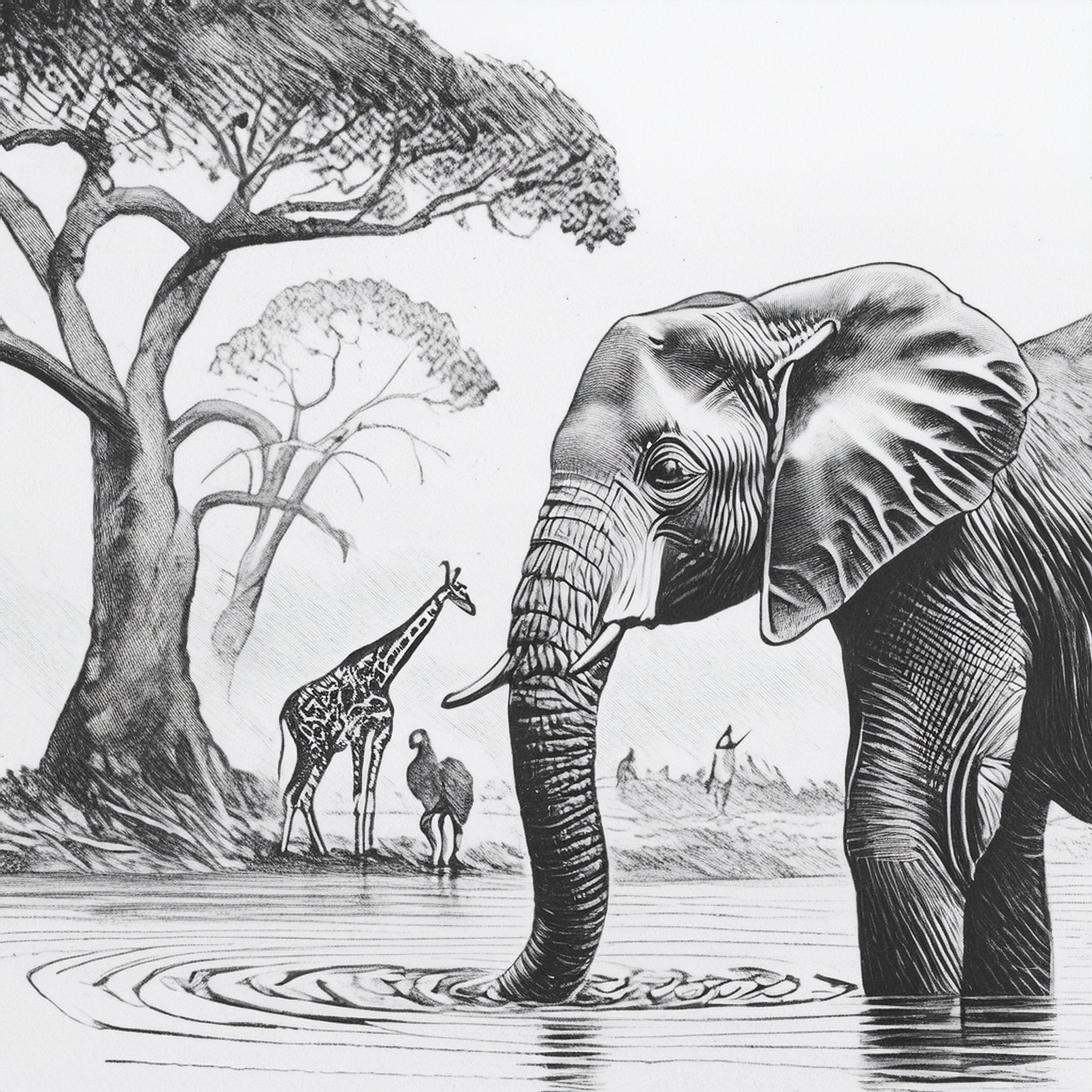

To coincide with the release of Image 3, the Firefly web app is getting Structure Reference and Style Reference, which Adobe’s pitching as new ways to “advance creative control.” (Both were announced in March, but they’re now becoming broadly available.) With Structure Reference, users can generate new images that match the “structure” of a reference image, such as a head-on view of a race car. Style Reference is essentially style transfer by another name, preserving the content of an image (e.g. elephants in the African Safari) while mimicking the style (e.g. pencil sketch) of a target image.

Here’s Structure Reference in action:

Original image.

Transformed with Structure Reference.

And Style Reference:

Original image.

Transformed with Style Reference.

I asked Adobe if, with all the upgrades, Firefly image generation pricing would change. Currently, the cheapest Firefly premium plan is $4.99 per month, undercutting competition like Midjourney ($10 per month) and OpenAI (which gates DALL-E 3 behind a $20-per-month ChatGPT Plus subscription).

Adobe said that its current tiers will remain in place for now, along with its generative credit system. It also said that its indemnity policy, which states Adobe will pay copyright claims related to works generated in Firefly, won’t be changing either, nor will its approach to watermarking AI-generated content. Content Credentials (metadata to identify AI-generated media) will continue to be automatically attached to all Firefly image generations on the web and in Photoshop, whether generated from scratch or partially edited using generative features.