Though the race to energy the huge ambitions of AI corporations would possibly appear to be it’s all about Nvidia, there’s a actual competitors moving into AI accelerator chips. The most recent instance: At

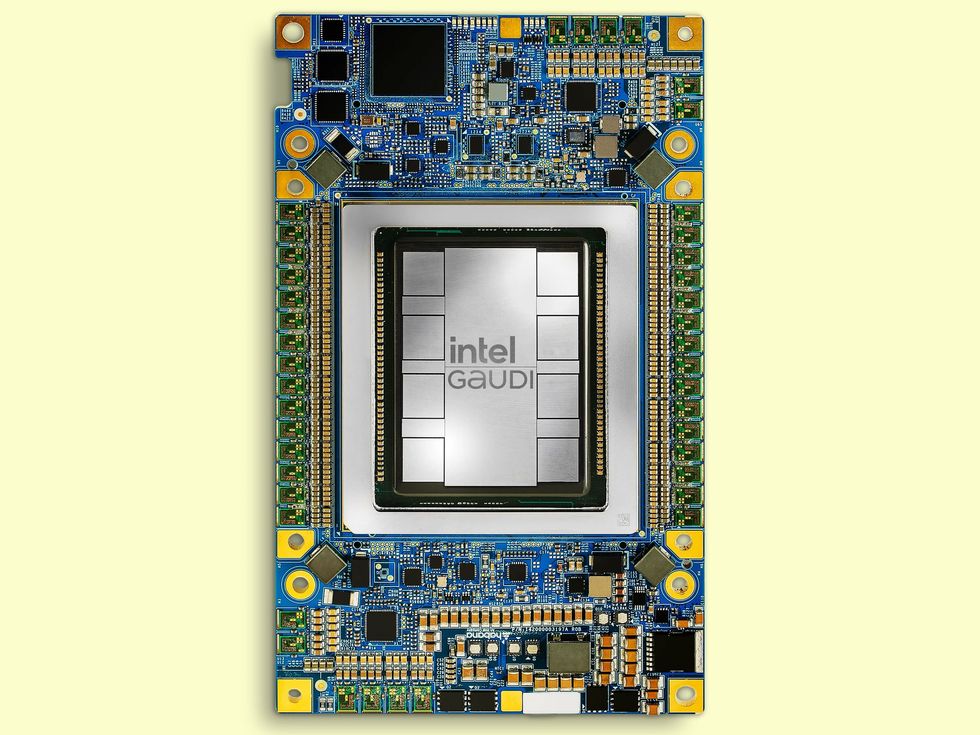

Intel’s Imaginative and prescient 2024 occasion this week in Phoenix, Ariz., the corporate gave the primary architectural particulars of its third-generation AI accelerator, Gaudi 3.

With the predecessor chip, the company had touted how close to parity its performance was to Nvidia’s top chip of the time, H100, and claimed a superior ratio of price versus performance. With Gaudi 3, it’s pointing to large-language-model (LLM) performance where it can claim outright superiority. But looming in the background is Nvidia’s next GPU, the Blackwell B200, expected to arrive later this year.

Gaudi Architecture Evolution

Gaudi 3 doubles down on its predecessor Gaudi 2’s architecture, literally in some cases. Instead of Gaudi 2’s single chip, Gaudi 3 is made up of two identical silicon dies joined by a high-bandwidth connection. Each has a central region of 48 megabytes of cache memory. Surrounding that is the chip’s AI workforce: four engines for matrix multiplication and 32 programmable units called tensor processor cores. All that is surrounded by connections to memory and capped with media processing and network infrastructure at one end.
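To make the two-die arithmetic concrete, here is a minimal Python sketch that tallies package-level resources. It assumes the figures quoted above (48 megabytes of cache, four matrix engines, 32 tensor processor cores) are per die, which is one reading of the description. The `Die` class and `package_totals` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Die:
    """Per-die resources, ASSUMING the article's figures are per die."""
    cache_mb: int = 48
    matrix_engines: int = 4
    tensor_cores: int = 32


def package_totals(die: Die, num_dies: int = 2) -> dict:
    """Multiply each per-die resource by the number of identical dies."""
    return {
        "cache_mb": die.cache_mb * num_dies,
        "matrix_engines": die.matrix_engines * num_dies,
        "tensor_cores": die.tensor_cores * num_dies,
    }


totals = package_totals(Die())
print(totals)
```

Under that per-die assumption, the two-die package would carry twice each figure.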

Intel says that all of that combines to produce double the AI compute of Gaudi 2 using the 8-bit floating-point format that has emerged as key to training transformer models. It also provides a fourfold boost for computations using the BFloat16 number format.

Gaudi 3 LLM Performance

Intel projects a 40 percent faster training time for the GPT-3 175B large language model versus the H100 and even better results for the 7-billion and 8-billion parameter versions of Llama2.

For inferencing, the contest was much closer, according to Intel: the new chip delivered 95 to 170 percent of the performance of H100 for two versions of Llama. For the Falcon 180B model, though, Gaudi 3 achieved as much as a fourfold advantage. Unsurprisingly, the advantage was smaller against the Nvidia H200: 80 to 110 percent for Llama and 3.8x for Falcon.

Intel claims more dramatic results when measuring power efficiency, where it projects as much as 220 percent of H100’s value on Llama and 230 percent on Falcon.

“Our customers are telling us that what they find limiting is getting enough power to the data center,” says Intel’s Habana Labs chief operating officer Eitan Medina.

The energy-efficiency results were best when the LLMs were tasked with delivering a longer output. Medina puts that advantage down to the Gaudi architecture’s large-matrix math engines. These are 512 bits across. Other architectures use many smaller engines to perform the same calculation, but Gaudi’s supersize version “needs almost an order of magnitude less memory bandwidth to feed it,” he says.
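One way to see why a single large engine can need less operand bandwidth than many small ones is a toy model, sketched below in Python. It assumes each engine is a square array of multiply-accumulate units that takes one row vector and one column vector per cycle, and that small engines do not share operands with one another. These assumptions, the `operands_per_cycle` function, and the array sizes are illustrative only; they are not Gaudi’s actual dimensions or Intel’s method.

```python
def operands_per_cycle(total_macs: int, side: int) -> int:
    """Operand values fed per cycle to keep `total_macs` multiply-accumulate
    units busy when they are grouped into square `side` x `side` engines.

    Toy model (an assumption): each engine consumes one vector of `side`
    values along each of its two edges per cycle, with no operand sharing
    between engines.
    """
    macs_per_engine = side * side
    if total_macs % macs_per_engine != 0:
        raise ValueError("total_macs must be a multiple of side * side")
    engines = total_macs // macs_per_engine
    return engines * 2 * side


# Hypothetical sizes chosen only to illustrate the scaling.
TOTAL_MACS = 256 * 256
large = operands_per_cycle(TOTAL_MACS, side=256)  # one big engine
small = operands_per_cycle(TOTAL_MACS, side=32)   # 64 small engines
print(large, small, small / large)
```

In this model, shrinking the engine side by a factor of k while keeping the total compute fixed multiplies the operand traffic by k, so an eightfold difference in side length yields an eightfold difference in bandwidth demand.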

Gaudi 3 Versus Blackwell

It’s speculation to compare accelerators before they’re in hand, but there are a couple of data points to compare, particularly in memory and memory bandwidth. Memory has always been important in AI, and as generative AI has taken hold and popular models reach the tens of billions of parameters in size, it’s become even more critical.
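A rough sense of why parameter counts drive memory demand comes from multiplying parameters by bytes per parameter. The Python sketch below does only that: it estimates the space for model weights alone and ignores activations, key-value caches, and optimizer state, all of which add to the real requirement. The `weight_gigabytes` helper is a hypothetical name, and the parameter counts are the nominal sizes in the model names mentioned in this article.

```python
# Bytes needed to store one parameter in each number format.
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}


def weight_gigabytes(num_params: int, fmt: str) -> float:
    """Approximate storage for model weights only, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


# Nominal parameter counts taken from the model names.
models = {
    "Llama2 7B": 7_000_000_000,
    "GPT-3 175B": 175_000_000_000,
    "Falcon 180B": 180_000_000_000,
}

for name, params in models.items():
    sizes = {fmt: weight_gigabytes(params, fmt) for fmt in ("fp8", "bf16")}
    print(name, sizes)
```

Halving the bytes per parameter, as in moving from BFloat16 to an 8-bit format, halves the weight footprint, which is one reason both memory capacity and number format matter for large models.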

Both make use of high-bandwidth memory (HBM), which is a stack of DRAM memory dies atop a control chip. In high-end accelerators, it sits inside the same package as the logic silicon, surrounding it on at least two sides. Chipmakers use advanced packaging, such as Intel’s EMIB silicon bridges or TSMC’s chip-on-wafer-on-silicon (CoWoS), to provide a high-bandwidth path between the logic and memory.

As the chart shows, Gaudi 3 has more HBM than H100, but less than H200, B200, or AMD’s MI300. Its memory bandwidth is also superior to H100’s. Possibly of importance to Gaudi’s price competitiveness, it uses the less expensive HBM2e versus the others’ HBM3 or HBM3e, which are thought to be a significant fraction of the tens of thousands of dollars the accelerators reportedly sell for.

One more point of comparison is that Gaudi 3 is made using TSMC’s N5 (sometimes called 5-nanometer) process technology. Intel has basically been a process node behind Nvidia for generations of Gaudi, so it’s been stuck comparing its latest chip to one that was at least one rung higher on the Moore’s Law ladder. With Gaudi 3, that part of the race is narrowing slightly. The new chip uses the same process as H100 and H200. What’s more, instead of moving to 3-nm technology, the coming competitor Blackwell is made on a process called N4P. TSMC describes N4P as being in the same 5-nm family as N5 but delivering an 11 percent performance boost, 22 percent better efficiency, and 6 percent higher density.

In terms of Moore’s Law, the big question is what technology the next generation of Gaudi, currently code-named Falcon Shores, will use. So far the product has relied on TSMC technology while Intel gets its foundry business up and running. But next year Intel will begin offering its 18A technology to foundry customers and will already be using 20A internally. These two nodes bring the next generation of transistor technology, nanosheets, with backside power delivery, a combination TSMC is not planning until 2026.