Generative AI stretches our current copyright law in unforeseen and uncomfortable ways. In the US, the Copyright Office has issued guidance stating that the output of image-generating AI isn’t copyrightable unless human creativity has gone into the prompts that generated the output. This ruling in itself raises many questions: How much creativity is needed, and is that the same kind of creativity that an artist exercises with a paintbrush? If a human writes software to generate prompts that in turn generate an image, is that copyrightable? If the output of a model can’t be owned by a human, who (or what) is responsible if that output infringes existing copyright? Is an artist’s style copyrightable, and if so, what does that mean?

Another group of cases involving text (typically novels and novelists) argues that using copyrighted texts as part of the training data for a large language model (LLM) is itself copyright infringement,¹ even if the model never reproduces those texts as part of its output. But reading texts has been part of the human learning process for as long as reading has existed, and while we pay to buy books, we don’t pay to learn from them. These cases often point out that the texts used in training were acquired from pirated sources, which makes for good press, although that claim has no legal value. Copyright law says nothing about whether texts are acquired legally or illegally.

How do we make sense of this? What should copyright law mean in the age of artificial intelligence?

In an article in The New Yorker, Jaron Lanier introduces the idea of data dignity, which implicitly distinguishes between training a model and generating output using a model. Training an LLM means teaching it how to understand and reproduce human language. (The word “teaching” arguably invests too much humanity into what is still software and silicon.) Generating output means what it says: providing the model with instructions that cause it to produce something. Lanier argues that training a model should be a protected activity but that the output generated by a model can infringe on someone’s copyright.

This distinction is attractive for several reasons. First, current copyright law protects “transformative use.” You don’t have to understand much about AI to realize that a model is transformative. Reading about the lawsuits reaching the courts, we sometimes have the feeling that authors believe that their works are somehow hidden inside the model, that George R. R. Martin thinks that if he searched through the trillion or so parameters of GPT-4, he’d find the text of his novels. He’s welcome to try, and he won’t succeed. (OpenAI won’t give him the GPT models, but he can download the model for Meta’s Llama 2 and have at it.) This fallacy was probably encouraged by another New Yorker article arguing that an LLM is like a compressed version of the web. That’s a nice image, but it is fundamentally wrong. What the model contains is an enormous set of parameters, derived from all the content ingested during training, that represent the probability that one word is likely to follow another. A model isn’t a copy or a reproduction, in whole or in part, lossy or lossless, of the data it’s trained on; it is the potential for creating new and different content. AI models are probability engines; an LLM computes the next word that’s most likely to follow the prompt, then the next word most likely to follow that, and so on. The ability to emit a sonnet that Shakespeare never wrote: that’s transformative, even if the new sonnet isn’t very good.

Lanier’s argument is that building a better model is a public good, that the world will be a better place if we have computers that can work directly with human language, and that better models serve us all, even the authors whose works are used to train the model. I can ask a vague, poorly formed question like “In which 21st century novel do two women travel to Parchman prison to pick up one of their husbands who is being released,” and get the answer “Sing, Unburied, Sing by Jesmyn Ward.” (Highly recommended, BTW, and I hope this mention generates a few sales for her.) I can also ask for a reading list about plagues in 16th century England, algorithms for testing prime numbers, or anything else. Any of these prompts might generate book sales, but whether or not sales result, they will have expanded my knowledge. Models that are trained on a wide variety of sources are a good; that good is transformative and should be protected.

The problem with Lanier’s concept of data dignity is that, given the current state of the art in AI models, it is impossible to distinguish meaningfully between “training” and “generating output.” Lanier recognizes that problem in his criticism of the current generation of “black box” AI, in which it’s impossible to connect the output to the training inputs on which the output was based. He asks, “Why don’t bits come attached to the stories of their origins?,” pointing out that this problem has been with us since the beginning of the web. Models are trained by giving them small bits of input and asking them to predict the next word billions of times; tweaking the model’s parameters slightly to improve the predictions; and repeating that process thousands, if not millions, of times. The same process is used to generate output, and it’s important to understand why that process makes copyright problematic. If you give a model a prompt about Shakespeare, it might determine that the output should start with the word “To.” Given that it has already chosen “To,” there’s a slightly higher probability that the next word in the output will be “be.” Given that, there’s a slightly higher probability still that the next word will be “or.” And so forth. From this standpoint, it’s hard to say that the model is copying the text. It’s just following probabilities: a “stochastic parrot.” It’s more like monkeys typing randomly at keyboards than a human plagiarizing a literary text, but these are highly trained, probabilistic monkeys that actually have a chance at reproducing the works of Shakespeare.

An important consequence of this process is that it’s not possible to connect the output back to the training data. Where did the word “or” come from? Yes, it happens to be the next word in Hamlet’s famous soliloquy; but the model wasn’t copying Hamlet, it just picked “or” out of the hundreds of thousands of words it could have chosen, on the basis of statistics. It isn’t being creative in any way we as humans would recognize. It’s maximizing the probability that we (humans) will perceive the output it generates as a valid response to the prompt.
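To make the “To be or” walk-through concrete, here is a minimal sketch of next-word prediction. It is a deliberate simplification: a real LLM uses a neural network over tokens, not a table of word-pair counts, and the one-line corpus is our own assumption for illustration. What it does show is that once the text has been reduced to follow-probabilities, the generator picks words from statistics alone and keeps no record of where any word came from.

```python
from collections import Counter, defaultdict

# Toy corpus: an assumption for illustration. Real models train on vastly more text.
CORPUS = "to be or not to be that is the question"

def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def generate(counts, start, length):
    """Greedy generation: repeatedly append the most probable next word."""
    output = [start]
    for _ in range(length):
        followers = counts[output[-1]]
        if not followers:
            break  # no word ever followed this one in the corpus
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

counts = train_bigrams(CORPUS)
probs_after_to = next_word_probabilities(counts, "to")
probs_after_be = next_word_probabilities(counts, "be")
text = generate(counts, "to", 3)
```

In this toy table, “be” always follows “to,” while “or” and “that” are equally likely after “be.” Nothing in `counts` says which sentence contributed which count, which is the black-box problem in miniature.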

We believe that authors should be compensated for the use of their work: not in the creation of the model, but when the model produces their work as output. Is it possible? For a company like O’Reilly Media, a related question comes into play. Is it possible to distinguish between creative output (“Write in the style of Jesmyn Ward”) and actionable output (“Write a program that converts between current prices of currencies and altcoins”)? The response to the first question might be the start of a new novel, which might be substantially different from anything Ward wrote, and which doesn’t devalue her work any more than her second, third, or fourth novels devalue her first novel. Humans copy each other’s style all the time! That’s why English style post-Hemingway is so distinct from the style of 19th century authors, and an AI-generated homage to an author might actually increase the value of the original work, much as human “fan-fic” encourages rather than detracts from the popularity of the original.

The response to the second question is a piece of software that could take the place of something a previous author has written and published on GitHub. It could substitute for that software, possibly cutting into the programmer’s revenue. But even these two cases aren’t as different as they first appear. Authors of “literary” fiction are safe, but what about actors or screenwriters whose work could be ingested by a model and transformed into new roles or scripts? There are 175 Nancy Drew books, all “authored” by the nonexistent Carolyn Keene but written by a long chain of ghostwriters. In the future, AIs may be included among those ghostwriters. How do we account for the work of authors (of novels, screenplays, or software) so they can be compensated for their contributions? What about the authors who teach their readers how to master a complicated technology topic? The output of a model that reproduces their work provides a direct substitute rather than a transformative use that may be complementary to the original.

Compensating authors this way may not be possible if you use a generative model configured as a chat server by itself. But that isn’t the end of the story. In the year or so since ChatGPT’s release, developers have been building applications on top of the state-of-the-art foundation models. There are many different ways to build applications, but one pattern has become prominent: retrieval-augmented generation, or RAG. RAG is used to build applications that “know about” content that isn’t in the model’s training data. For example, you might want to write a stockholders’ report or generate text for a product catalog. Your company has all the data you need, but your company’s financials obviously weren’t in ChatGPT’s training data. RAG takes your prompt, loads the relevant documents from your company’s archive, packages everything together, and sends the prompt to the model. It can include instructions like “Only use the data included with this prompt in the response.” (This may be too much information, but this process generally works by generating “embeddings” for the company’s documentation, storing those embeddings in a vector database, and retrieving the documents that have embeddings similar to the user’s original question. Embeddings have the important property that they reflect relationships between words and texts. They make it possible to search for relevant or similar documents.)
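The retrieval step described above can be sketched in a few lines. This is an illustration under stated assumptions, not a production design: the `embed` function is a bag-of-words stand-in for a real embedding model, a plain dictionary stands in for the vector database, and the document IDs and text are invented. The shape of the pattern is the point: embed the question, find the most similar documents, and package them into the prompt along with the instruction to use only that data.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "archive"; IDs and text are made up for illustration.
DOCUMENTS = {
    "q3-financials": "Revenue grew in the third quarter while costs fell.",
    "product-catalog": "The catalog lists widgets, gadgets, and replacement parts.",
    "hr-handbook": "Employees accrue vacation days every month.",
}

def embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(question, documents, top_k=1):
    """Return the top_k (doc_id, text) pairs most similar to the question."""
    query = embed(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine_similarity(query, embed(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, retrieved):
    """Package the instruction, the retrieved documents, and the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieved)
    return (
        "Only use the data included with this prompt in the response.\n\n"
        f"Documents:\n{context}\n\n"
        f"Question: {question}"
    )

question = "How did revenue and costs change in the third quarter?"
retrieved = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, retrieved)
```

Note that each retrieved document keeps its ID all the way into the prompt. That detail costs nothing here, and it is what makes the response traceable afterward.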

While RAG was originally conceived as a way to give a model proprietary information without going through the labor- and compute-intensive process of training, in doing so it creates a connection between the model’s response and the documents from which the response was created. The response is no longer constructed from random words and phrases that are detached from their sources. We have provenance. While it still may be difficult to evaluate the contribution of the different sources (23% from A, 42% from B, 35% from C), and while we can expect a lot of natural language “glue” to have come from the model itself, we’ve taken a big step forward toward Lanier’s data dignity. We’ve created traceability where we previously had only a black box. If we published someone’s currency conversion software in a book or training course and our language model reproduces it in response to a question, we can attribute that to the original source and allocate royalties appropriately. The same would apply to new novels in the style of Jesmyn Ward or, perhaps more appropriately, to the never-named creators of pulp fiction and screenplays.
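As a rough illustration of how shares like “23% from A, 42% from B” might be estimated, the sketch below scores each retrieved source by how many three-word sequences it shares with the response, then splits a royalty pool in proportion. This is a crude heuristic of our own choosing, not a description of any system in use; the function names, source IDs, texts, and pool size are all hypothetical. A real attribution scheme would have to handle paraphrase, the model’s own “glue,” and text shared by several sources.

```python
import re

def trigrams(text):
    """Return the set of three-word sequences in `text` (lowercased)."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}

def attribute(response, sources):
    """Estimate each source's share of the response by trigram overlap.

    A trigram found in several sources counts once for each of them.
    """
    response_grams = trigrams(response)
    overlap = {
        source_id: len(response_grams & trigrams(text))
        for source_id, text in sources.items()
    }
    total = sum(overlap.values())
    if total == 0:
        return {source_id: 0.0 for source_id in sources}
    return {source_id: n / total for source_id, n in overlap.items()}

def allocate_royalties(shares, pool):
    """Split a royalty pool in proportion to the estimated shares."""
    return {source_id: round(pool * share, 2) for source_id, share in shares.items()}

# Hypothetical retrieved sources and model response.
sources = {
    "A": "convert dollars to euros by multiplying by the exchange rate",
    "B": "fetch the current exchange rate from a pricing service",
}
response = (
    "First fetch the current exchange rate from a pricing service, "
    "then convert dollars to euros by multiplying by the exchange rate."
)

shares = attribute(response, sources)
royalties = allocate_royalties(shares, pool=15.0)
```

Here source A contributes 8 matching trigrams and source B contributes 7, so the pool is split 8 to 7. The words “First” and “then” match nothing, which is one small example of glue supplied by the model.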

Google’s “AI-powered overview” feature² is a good example of what we can expect with RAG. We can’t say for certain that it was implemented with RAG, but it clearly follows the pattern. Google, which invented Transformers, knows better than anyone that Transformer-based models destroy metadata unless you do a lot of special engineering. But Google has the best search engine in the world. Given a search string, it’s simple for Google to perform the search, take the top few results, and then send them to a language model for summarization. It relies on the model for language and grammar but derives the content from the documents included in the prompt. That process could give exactly the results shown below: a summary of the search results, with down arrows that you can open to see the sources from which the summary was generated. Whether this feature improves the search experience is a good question: while a user can trace the summary back to its source, it places the source two steps away from the summary. You have to click the down arrow, then click on the source to get to the original document. However, that design issue isn’t germane to this discussion. What’s important is that RAG (or something like RAG) has enabled something that wasn’t possible before: we can now trace the sources of an AI system’s output.

Now that we know that it’s possible to produce output that respects copyright and, if appropriate, compensates the author, it’s up to regulators to hold companies accountable for failing to do so, just as they are held accountable for hate speech and other forms of inappropriate content. We should not buy into the assertion of the large LLM providers that this is an impossible task. It is one more of the many business-model and ethical challenges that they must overcome.

The RAG pattern has other advantages. We’re all familiar with the ability of language models to “hallucinate,” to make up facts that often sound very convincing. We constantly have to remind ourselves that AI is only playing a statistical game, and that its prediction of the most likely response to any prompt is often wrong. It doesn’t know that it is answering a question, nor does it understand the difference between facts and fiction. However, when your application supplies the model with the data needed to construct a response, the probability of hallucination goes down. It doesn’t go to zero, but it is significantly lower than when a model creates a response based purely on its training data. Limiting an AI to sources that are known to be accurate makes the AI’s output more accurate.

We’ve only seen the beginnings of what’s possible. The simple RAG pattern, with one prompt orchestrator, one content database, and one language model, will no doubt become more complex. We will soon see (if we haven’t already) systems that take input from a user, generate a series of prompts (possibly for different models), and combine the results into a new prompt that is then sent to a different model. You can already see this happening in the latest iteration of GPT-4: when you send a prompt asking GPT-4 to generate a picture, it processes that prompt, then sends the results (probably including other instructions) to DALL-E for image generation. Simon Willison has noted that if the prompt includes an image, GPT-4 never sends that image to DALL-E; it converts the image into a prompt, which is then sent to DALL-E with a modified version of your original prompt. Tracing provenance with these more complex systems will be difficult, but with RAG, we now have the tools to do it.
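One way to keep provenance intact across a chain of prompts is for every stage to record both the documents it retrieved and the earlier stages it drew on. The sketch below is a hypothetical data structure of our own, not a description of how GPT-4, DALL-E, or any other product works; the stage names and source IDs are invented. It shows only that if each step carries its sources forward, the final output can still be traced back to all of them.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    """One stage in a hypothetical multi-model pipeline."""
    name: str
    sources: list = field(default_factory=list)  # document IDs retrieved at this stage
    inputs: list = field(default_factory=list)   # earlier Steps whose output fed this one

def trace_sources(step):
    """Collect every source ID that contributed to `step`, directly or indirectly."""
    found = set()
    visited = set()
    stack = [step]
    while stack:
        current = stack.pop()
        if id(current) in visited:
            continue  # a step can feed several later steps; visit it once
        visited.add(id(current))
        found.update(current.sources)
        stack.extend(current.inputs)
    return sorted(found)

# Hypothetical pipeline: two upstream stages feed a combiner, which feeds a final model.
describe = Step("describe-image", sources=["user-upload"])
lookup = Step("retrieve-style-notes", sources=["book-123", "course-456"])
combine = Step("combine-prompts", inputs=[describe, lookup])
render = Step("generate-image", inputs=[combine])

all_sources = trace_sources(render)
```

The final stage retrieved nothing itself, yet `trace_sources(render)` still reports all three upstream sources, because each stage kept a pointer to what came before it.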

AI at O’Reilly Media

We’re experimenting with a variety of RAG-inspired ideas on the O’Reilly learning platform. The first extends Answers, our AI-based search tool that uses natural language queries to find specific answers in our vast corpus of courses, books, and videos. In this next version, we’re placing Answers directly within the learning context and using an LLM to generate content-specific questions about the material to enhance your understanding of the topic.

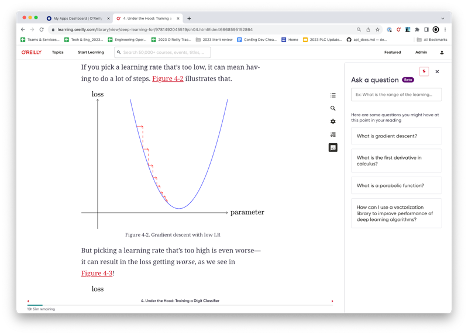

For example, if you’re reading about gradient descent, the new version of Answers will generate a set of related questions, such as how to compute a derivative or use a vector library to increase performance. In this instance, RAG is used to identify key concepts and provide links to other resources in the corpus that will deepen the learning experience.

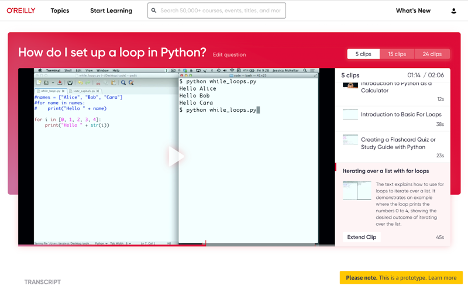

Our second project is geared toward making our long-form video courses simpler to browse. Working with our friends at Design Systems International, we’re developing a feature called “Ask this course,” which will allow you to “distill” a course into just the question you’ve asked. While conceptually similar to Answers, the idea of “Ask this course” is to create a new experience within the content itself rather than just linking out to related sources. We use an LLM to provide section titles and a summary to stitch together disparate snippets of content into a more cohesive narrative.

Footnotes