In case you missed it, 2,596 internal documents related to internal services at Google leaked.

A search marketer named Erfan Azimi brought them to Rand Fishkin’s attention, and we analyzed them.

Pandemonium ensued.

As you might imagine, it’s been a crazy 48 hours for us all and I’ve completely failed at being on vacation.

Naturally, some portion of the SEO community has quickly fallen into the standard fear, uncertainty and doubt spiral.

Reconciling new information can be difficult and our cognitive biases can stand in the way.

It’s valuable to discuss this further and offer clarification so we can use what we’ve learned more productively.

After all, these documents are the clearest look at how Google actually considers features of pages that we have had to date.

In this article, I want to be more explicitly clear, answer common questions, critiques, and concerns, and highlight additional actionable findings.

Finally, I want to give you a glimpse into how we will be using this information to do cutting-edge work for our clients. The hope is that we can collectively come up with the best ways to update our best practices based on what we’ve learned.

Reactions to the leak: My thoughts on common criticisms

Let’s start by addressing what people have been saying in response to our findings. I’m not a subtweeter, so this is to all of y’all and I say this with love. 😆

‘We already knew all that’

No, largely, you didn’t.

Generally speaking, the SEO community has operated based on a series of best practices derived from research-minded people from the late 1990s and early 2000s.

For instance, we’ve held the page title in such high regard for so long because early search engines weren’t full-text and only indexed the page titles.

These practices have been reluctantly updated based on information from Google, SEO software companies, and insights from the community. There were numerous gaps that you filled with your own speculation and anecdotal evidence from your experiences.

If you’re more advanced, you capitalized on temporary edge cases and exploits, but you never knew exactly the depth of what Google considers when it computes its rankings.

You also didn’t know most of its named systems, so you would not have been able to interpret much of what you see in these documents. So, you searched these documents for the things that you do understand and you concluded that you know everything here.

That’s the very definition of confirmation bias.

In reality, there are many features in these documents that none of us knew.

Just like the 2006 AOL search data leak and the Yandex leak, there will be value captured from these documents for years to come. Most importantly, you also just got actual confirmation that Google uses features that you might have suspected. There’s value in that if only to act as proof when you are trying to get something done with your clients.

Finally, we now have a better sense of internal terminology. One way Google spokespeople evade explanation is through language ambiguity. We are now better armed to ask the right questions and stop living on the abstraction layer.

‘We should just focus on customers and not the leak’

Sure. As an early and continued proponent of market segmentation in SEO, I obviously think we should be focusing on our customers.

Yet we can’t deny that we live in a reality where most of the web has conformed to Google to drive traffic.

We operate in a channel that’s considered a black box. Our customers ask us questions that we often respond to with “it depends.”

I’m of the mindset that there’s value in having an atomic understanding of what we’re working with so we can explain what it depends on. That helps with building trust and getting buy-in to execute on the work that we do.

Mastering our channel is in service of our focus on our customers.

‘The leak isn’t real’

Skepticism in SEO is healthy. Ultimately, you can decide to believe whatever you want, but here’s the reality of the situation:

- Erfan had his Xoogler source authenticate the documentation.

- Rand worked through his own authentication process.

- I also authenticated the documentation separately through my own network and backchannel sources.

I can say with absolute confidence that the leak is real and has been definitively verified in several ways, including through insights from people with deeper access to Google’s systems.

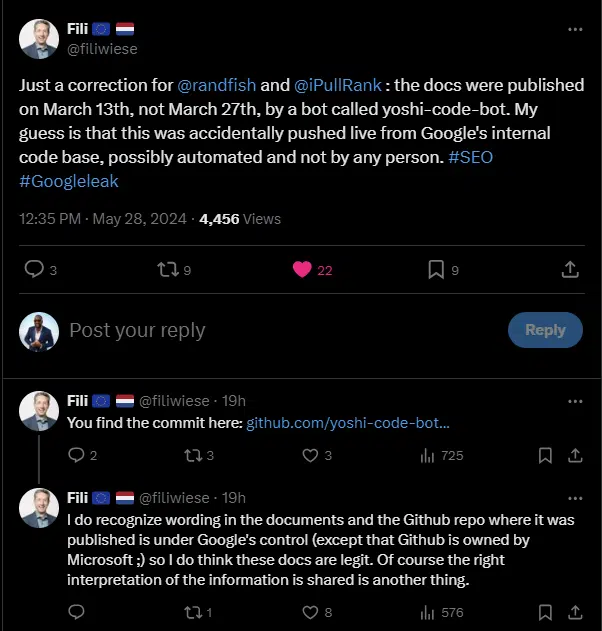

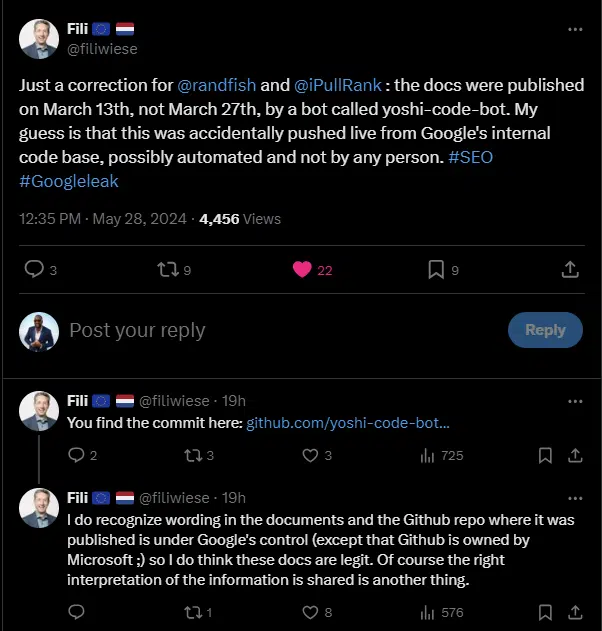

In addition to my own sources, Xoogler Fili Wiese offered his insight on X. Note that I’ve included his callout even though he vaguely sprinkled some doubt on my interpretations without offering any other information. But that’s a Xoogler for you, amiright? 😆

Finally, the documentation references specific internal ranking systems that only Googlers know about. I touched on some of those systems and cross-referenced their functions with detail from a Google engineer’s resume.

Oh, and Google just verified it in a statement as I was putting my final edits on this.

“This is a Nothingburger”

No doubt.

I’ll see you on web page 2 of the SERPs whereas I’m having mine medium with cheese, mayo, ketchup and mustard.

“It doesn’t say CTR so it’s not getting used”

So, let me get this straight: you think a marvel of modern technology that computes an array of data points across thousands of computers to generate and display results from tens of billions of pages in a quarter of a second, and that stores both clicks and impressions as features, is incapable of performing basic division on the fly?

… OK.
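To make the point concrete, here is a minimal sketch of deriving CTR from stored click and impression counts. The function name and the sample numbers are illustrative assumptions, not anything taken from the leaked documentation.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return clicks divided by impressions, or 0.0 when there are no impressions."""
    if impressions <= 0:
        return 0.0
    return clicks / impressions


# Hypothetical counts for a single result
print(click_through_rate(clicks=30, impressions=1000))  # 0.03
```

If both counts are stored, the ratio never needs to be stored as its own feature.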

“Be careful with drawing conclusions from this information”

I agree with this. We all have the potential to be wrong in our interpretation here due to the caveats that I highlighted.

To that end, we should take measured approaches in developing and testing hypotheses based on this data.

The conclusions I’ve drawn are based on my research into Google and precedents in Information Retrieval, but like I said, it’s entirely possible that my conclusions are not completely correct.

“The leak is to cease us from speaking about AI Overviews”

No.

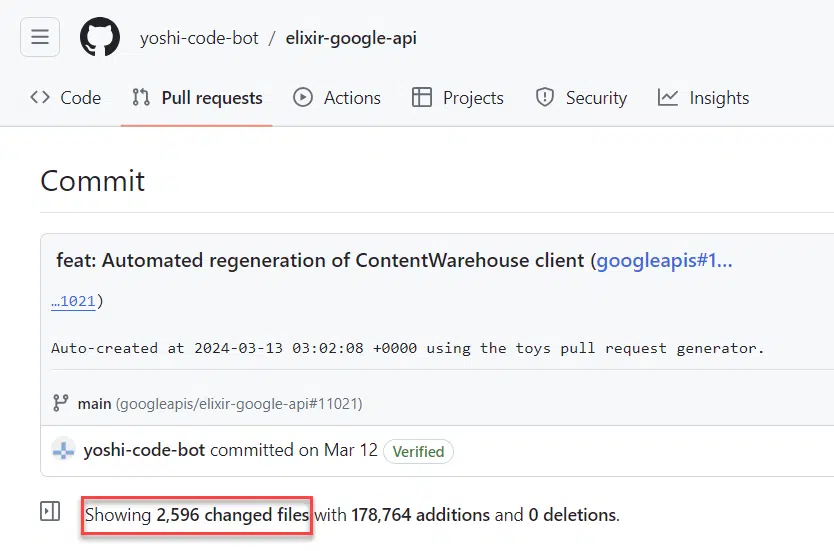

The misconfigured documentation deployment occurred in March. There’s some proof that this has been occurring in different languages (sans feedback) for 2 years.

The paperwork have been found in Could. Had somebody found it sooner, it will have been shared sooner.

The timing of AI Overviews has nothing to do with it. Minimize it out.

“We don’t know how old it is”

This is immaterial. Based on dates in the files, we know it’s at least newer than August 2023.

We know that commits to the repository happen regularly, presumably as a function of code being updated. We know that much of the docs haven’t changed in subsequent deployments.

We also know that when this code was deployed, it featured exactly the 2,596 files we have been reviewing and many of those files were not previously in the repository. Unless whoever/whatever did the git push did so with out-of-date code, this was the latest version at the time.

The documentation has other markers of recency, like references to LLMs and generative features, which suggests that it’s at least from the past year.

Either way, it has more detail than we have ever gotten before and is more than fresh enough for our consideration.

“This all isn’t related to search”

That’s correct. I indicated as much in my previous article.

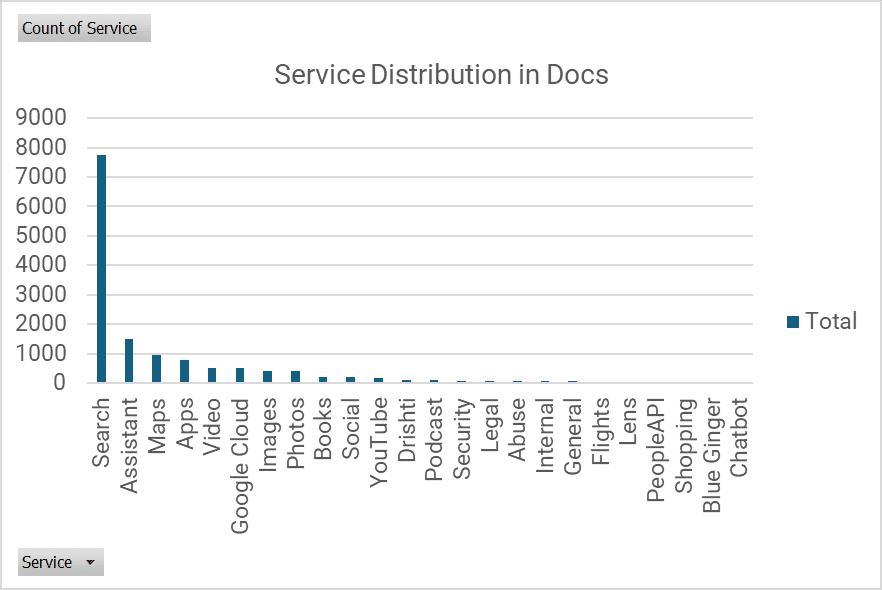

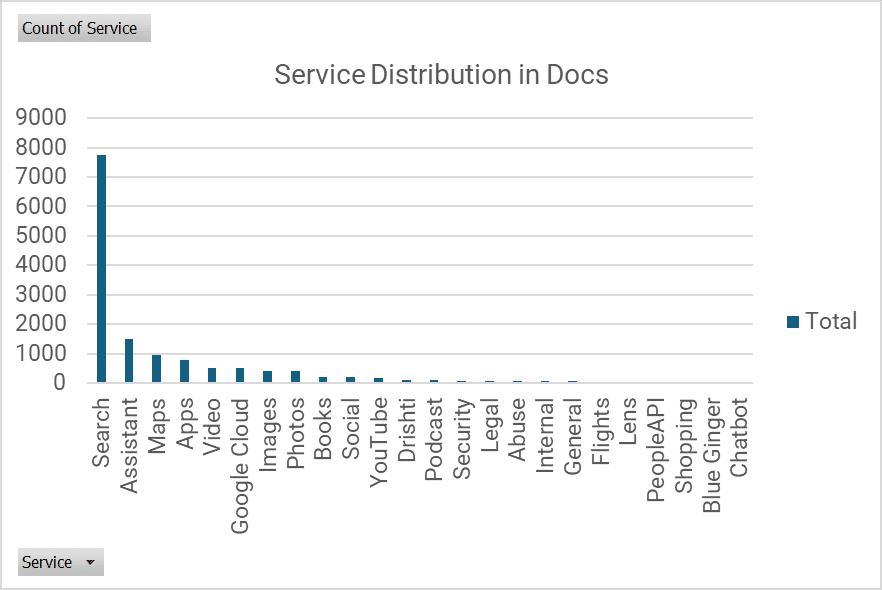

What I didn’t do was segment the modules into their respective services. I took the time to do that now.

Here’s a quick and dirty classification of the features, broadly categorized by service based on ModuleName:

Of the 14,000 features, roughly 8,000 are related to Search.
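For anyone who wants to repeat this kind of rough segmentation, a prefix-based pass over module names might look like the sketch below. The prefix-to-service mapping is a hypothetical assumption for illustration and is not the mapping behind the numbers above.

```python
from collections import Counter

# Hypothetical prefix-to-service mapping, for illustration only.
PREFIX_TO_SERVICE = {
    "Youtube": "YouTube",
    "Assistant": "Assistant",
    "Quality": "Search",
    "Blog": "Search",
}


def classify_module(module_name: str) -> str:
    """Map a module name to a service by its prefix, defaulting to 'Other'."""
    for prefix, service in PREFIX_TO_SERVICE.items():
        if module_name.startswith(prefix):
            return service
    return "Other"


def count_by_service(module_names):
    """Count how many modules fall under each service."""
    return Counter(classify_module(name) for name in module_names)
```

Anything that lands in "Other" is a prompt to read the module and extend the mapping by hand.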

“It’s just a list of variables”

Sure.

It’s a list of variables with descriptions that gives you a sense of the level of granularity Google uses to understand and process the web.

If you care about ranking factors, this documentation is Christmas, Hanukkah, Kwanzaa and Festivus.

“It’s a conspiracy! You buried [thing I’m interested in]”

Why would I bury something and then encourage people to go look at the documents themselves and write about their own findings?

Make it make sense.

“This won’t change anything about how I do SEO”

This is a choice and, perhaps, a function of me purposely not being prescriptive with how I presented the findings.

What we’ve learned should at least enhance your approach to SEO strategically in a few meaningful ways and can definitely change it tactically. I’ll discuss that below.

FAQs about the leaked docs

I’ve been asked a lot of questions in the past 48 hours so I think it’s valuable to memorialize the answers here.

What were the most interesting things you found?

It’s all very interesting to me, but here’s a finding that I did not include in the original article:

Google can specify a limit of results per content type.

In other words, they can specify that only X number of blog posts or Y number of news articles can appear for a given SERP.

Having a sense of these diversity limits could help us decide which content formats to create when we are selecting keywords to target.

For instance, if we know that the limit is three for blog posts and we don’t think we can outrank any of them, then maybe a video is a more viable format for that keyword.
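That decision can be sketched as a small helper. Everything here is an assumption for illustration: the format names, the limits, and the idea that you have already judged whether an incumbent is beatable. The real limits are not known.

```python
def viable_formats(serp_counts, format_limits, can_outrank):
    """Return formats that have an open slot or a beatable incumbent."""
    viable = []
    for fmt, limit in format_limits.items():
        has_open_slot = serp_counts.get(fmt, 0) < limit
        if has_open_slot or can_outrank.get(fmt, False):
            viable.append(fmt)
    return viable


# Hypothetical SERP: three blog posts already rank, one video does
limits = {"blog_post": 3, "video": 2}
counts = {"blog_post": 3, "video": 1}
beatable = {"blog_post": False}
print(viable_formats(counts, limits, beatable))  # ['video']
```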

What should we take away from this leak?

Search has many layers of complexity. Even though we have a broader view into things, we don’t know which elements of the ranking systems trigger or why.

We now have more clarity on the signals and their nuances.

What are the implications for local search?

Andrew Shotland is the authority on that. He and his team at LocalSEOGuide have begun to dig into things from that perspective.

What are the implications for YouTube Search?

I have not dug into that, but there are 23 modules with YouTube prefixes.

Someone should definitely do an interpretation of it.

How does this affect the (_______) house?

The straightforward reply is, it’s exhausting to know.

An concept that I wish to proceed to drill house is that Google’s scoring capabilities behave in a different way relying in your question and context. Given the proof we see in how the SERPs operate, there are totally different rating techniques that activate for various verticals.

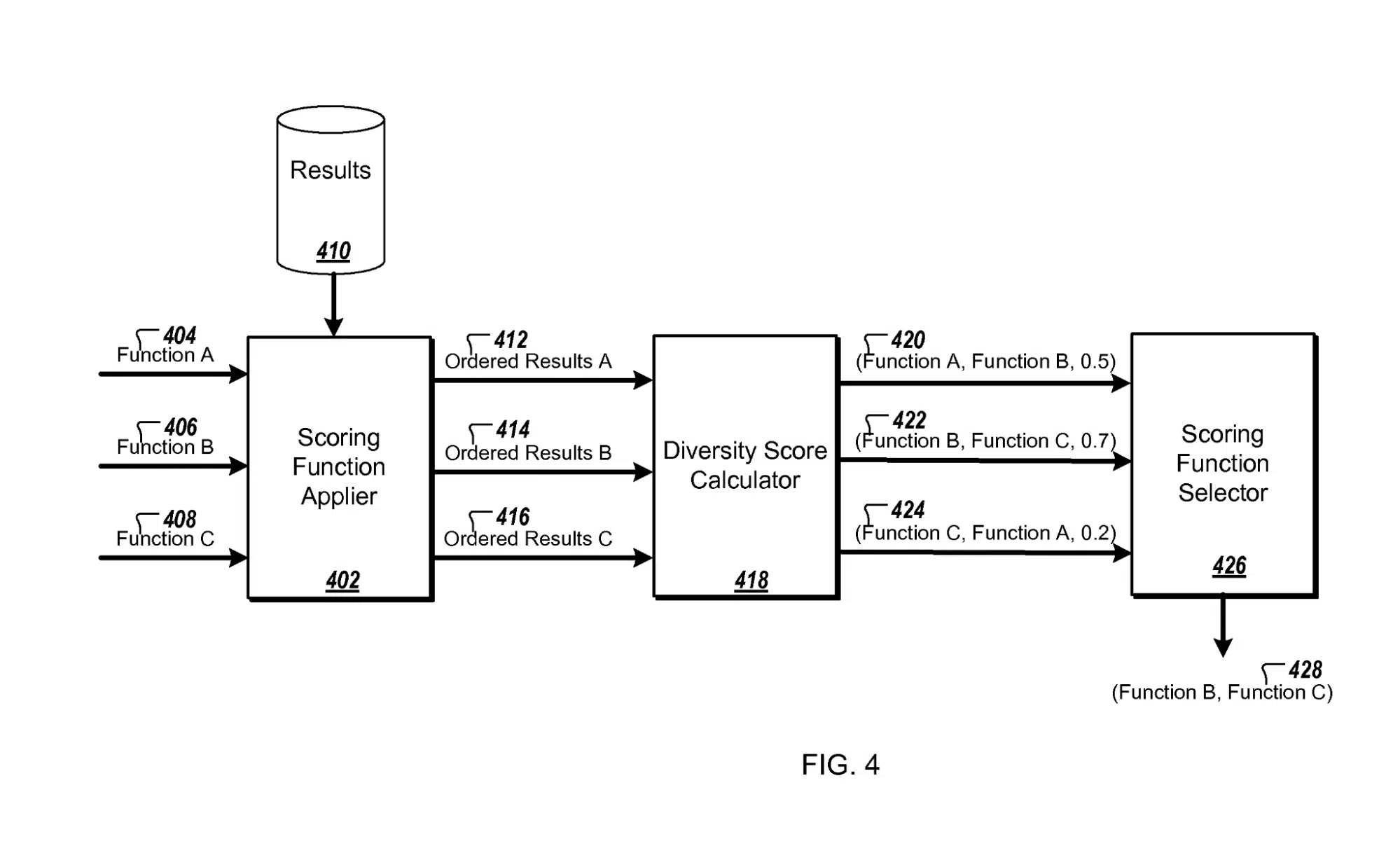

As an example this level, the Framework for evaluating internet search scoring capabilities patent reveals that Google has the aptitude to run a number of scoring capabilities concurrently and resolve which end result set to make use of as soon as the info is returned.

Whereas we have now most of the options that Google is storing, we do not need sufficient details about the downstream processes to know precisely what is going to occur for any given house.

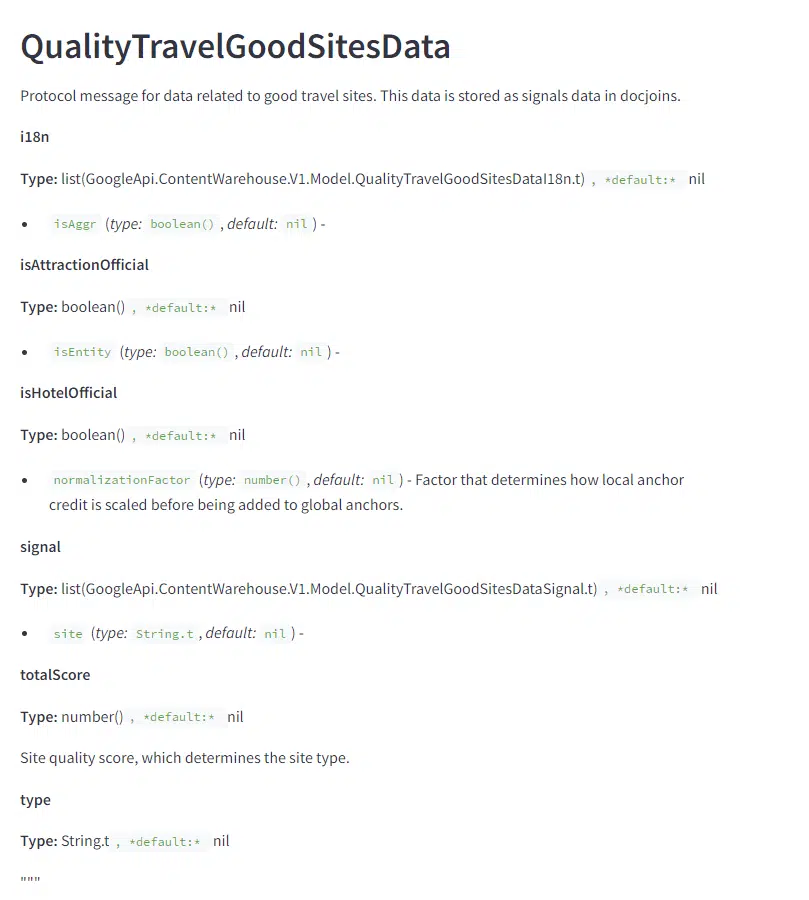

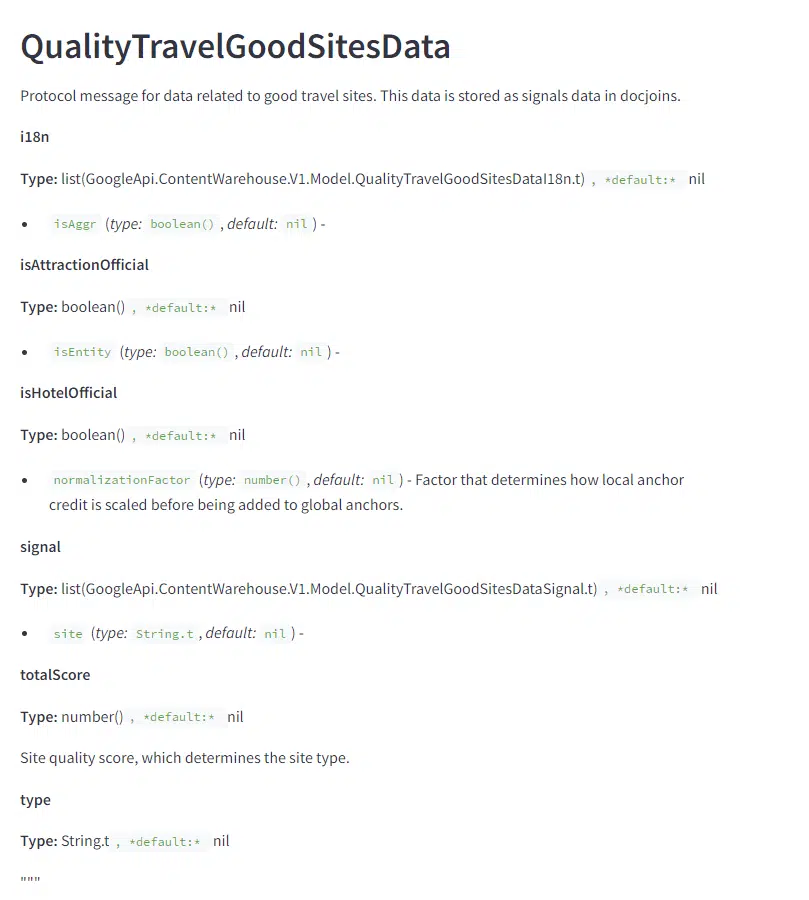

That said, there are some indicators of how Google accounts for some spaces like Travel.

The QualityTravelGoodSitesData module has features that identify and score travel sites, presumably to give them a Boost over non-official sites.

Do you really think Google is purposely torching small sites?

I don’t know.

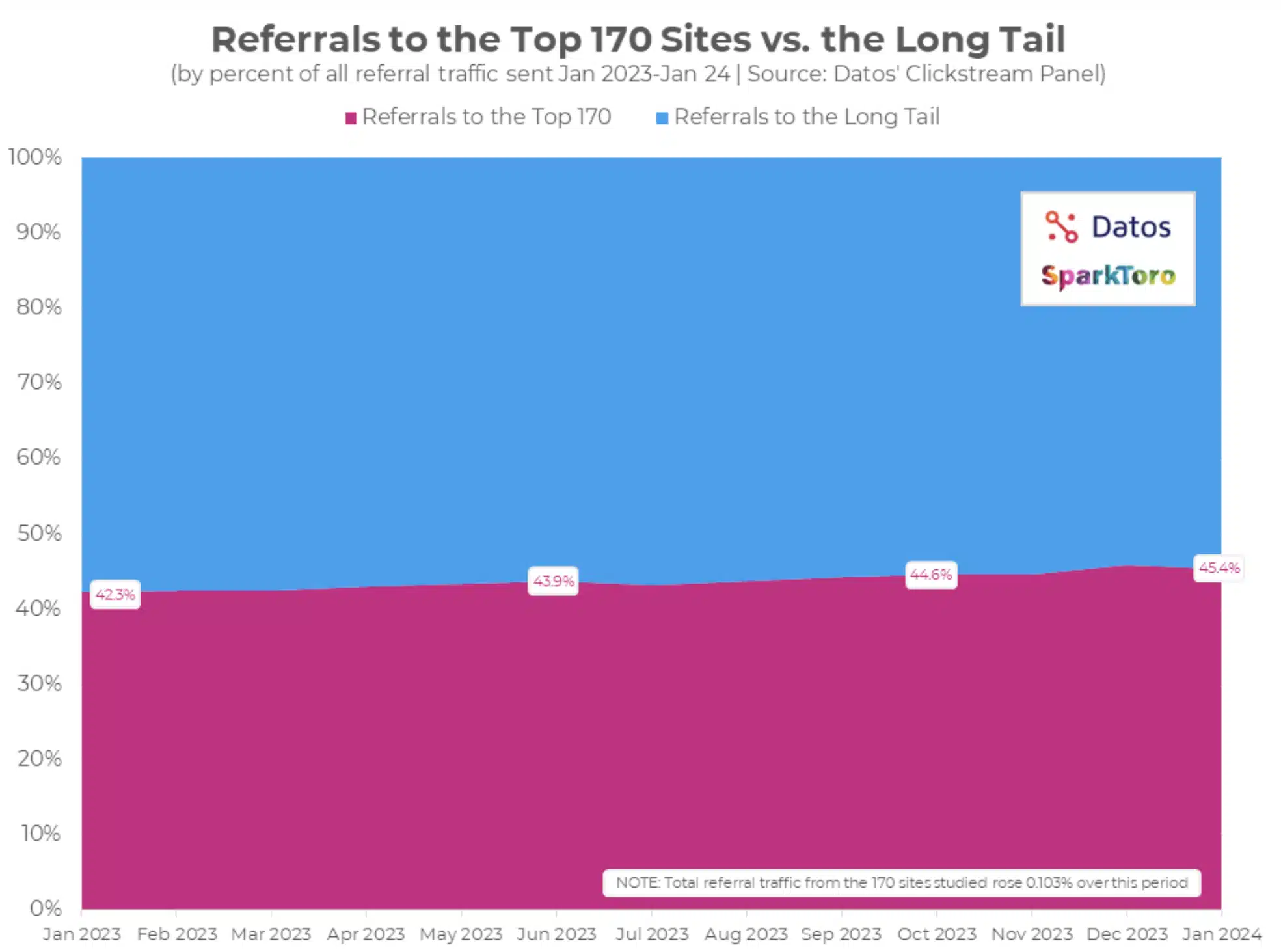

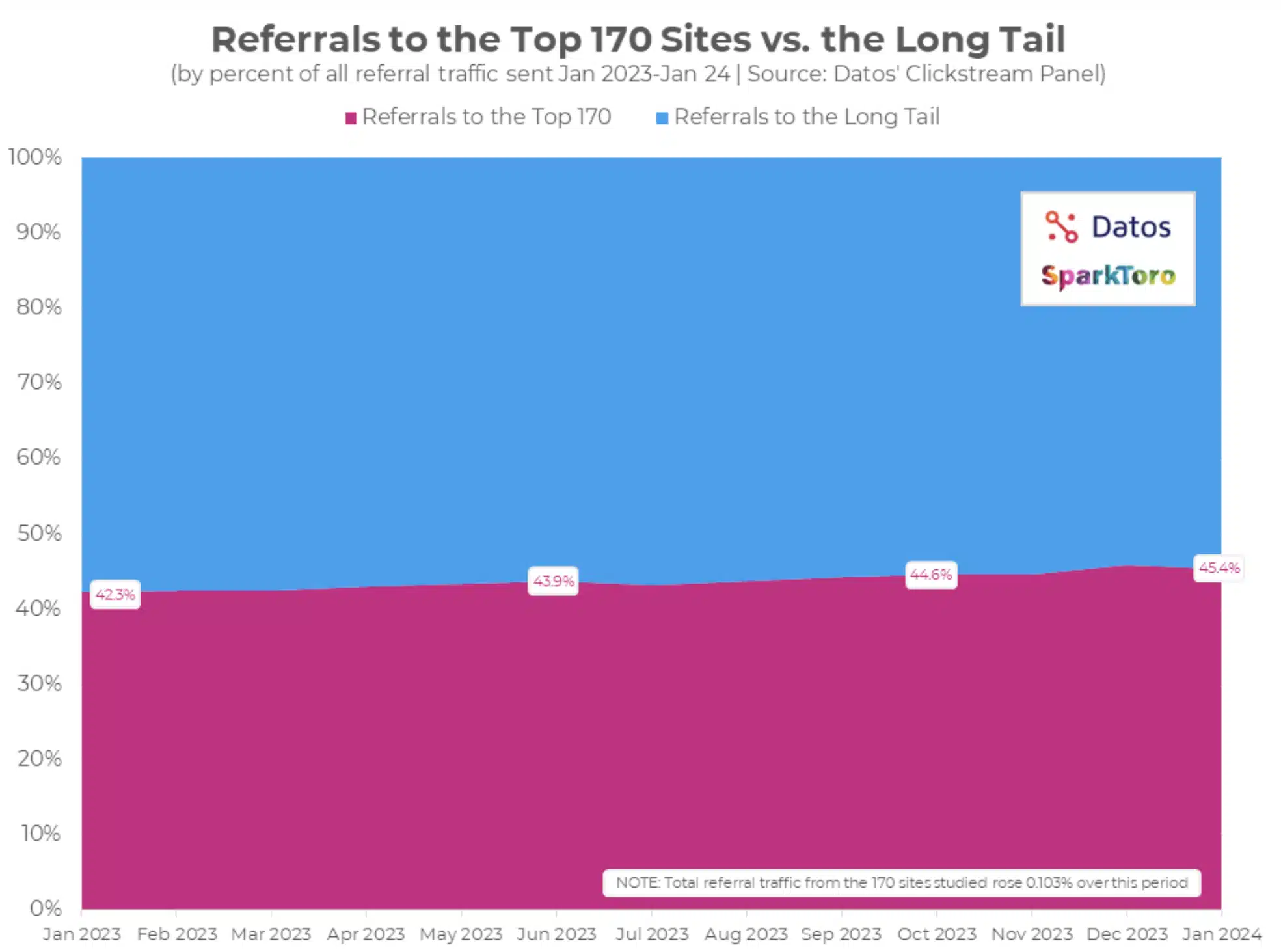

I also don’t know exactly how smallPersonalSite is defined or used, but I do know that there is a lot of evidence of small sites losing most of their traffic and Google is sending less traffic to the long tail of the web.

That’s impacting the livelihood of small businesses. And their outcry seems to have fallen on deaf ears.

Signals like links and clicks inherently support big brands. Those sites naturally attract more links and users are more compelled to click on brands they recognize.

Big brands can also afford agencies like mine and more sophisticated tooling for content engineering so they demonstrate better relevance signals.

It’s a self-fulfilling prophecy and it becomes increasingly difficult for small sites to compete in organic search.

If the sites in question would be considered “small personal sites” then Google should give them a fighting chance with a Boost that offsets the unfair advantage big brands have.

Do you think Googlers are bad people?

I don’t.

I think they generally are well-meaning folks who do the hard job of supporting many people based on a product that they have little influence over and that is difficult to explain.

They also work in a public multinational organization with many constraints. The information disparity creates a power dynamic between them and the SEO community.

Googlers could, however, dramatically improve their reputations and credibility among marketers and journalists by saying “no comment” more often rather than providing misleading, patronizing or belittling responses like the one they made about this leak.

Although it’s worth noting that the PR respondent Davis Thompson has been doing comms for Search for just the last two months and I’m sure he’s exhausted.

Is there anything related to AI Overviews?

I was not able to find anything directly related to SGE/AIO, but I have already presented a lot of clarity on how that works.

I did find a few policy features for LLMs. This suggests that Google determines what content can or cannot be used from the Knowledge Graph with LLMs.

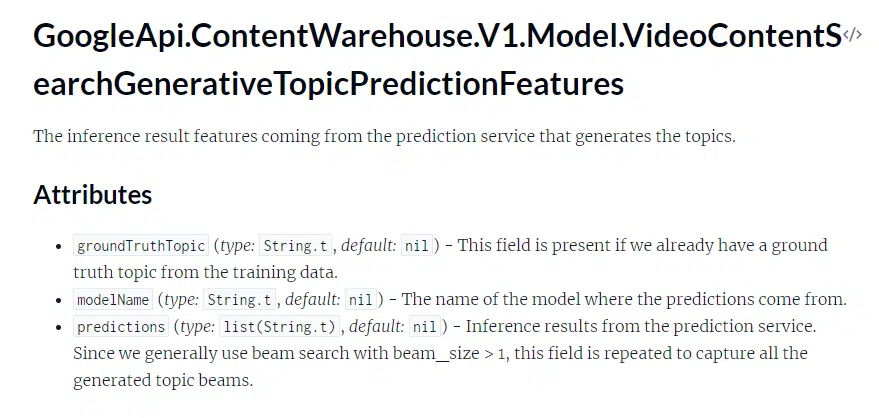

Is there anything related to generative AI?

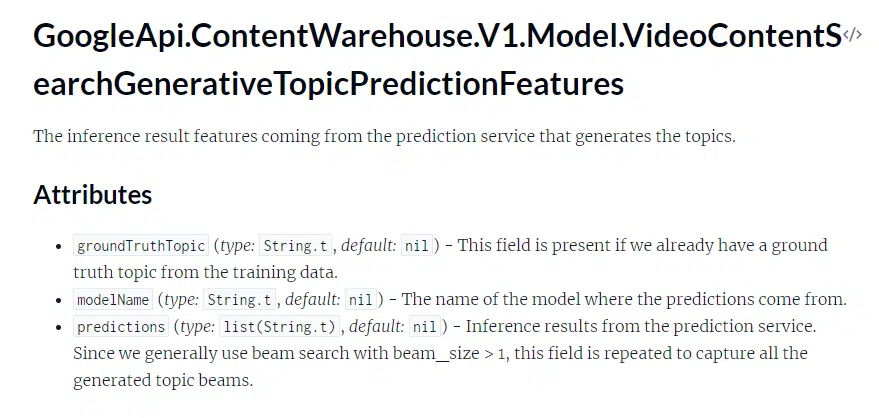

There is something related to video content. Based on the write-ups associated with the attributes, I suspect that they use LLMs to predict the topics of videos.

New discoveries from the leak

Some conversations I’ve had and observed over the past two days have helped me recontextualize my findings and dig for more things in the documentation.

Baby Panda is not HCU

Someone with knowledge of Google’s internal systems was able to answer that Baby Panda references an older system and is not the Helpful Content Update.

I, however, stand by my hypothesis that HCU exhibits similar properties to Panda and it likely requires similar features to improve for recovery.

A worthwhile experiment would be trying to recover traffic to a site hit by HCU by systematically improving click signals and links to see if it works. If someone with a site that’s been struck wants to volunteer as tribute, I have a hypothesis that I’d like to test on how you can recover.

The leaks technically go back two years

Derek Perkins and @SemanticEntity brought to my attention on Twitter that the leaks have been available across languages in Google’s client libraries for Java, Ruby, and PHP.

The difference with those is that there is very limited documentation in the code.

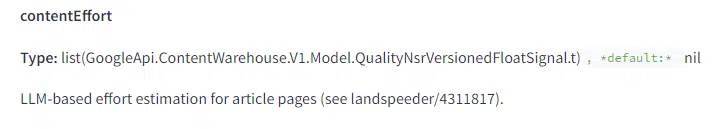

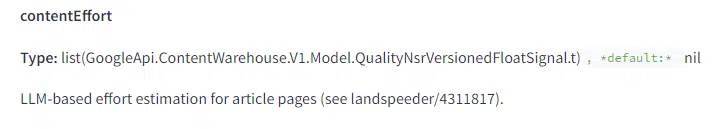

There’s a content effort score maybe for generative AI content

Google is attempting to determine the amount of effort employed when creating content. Based on the definition, we don’t know if all content is scored this way by an LLM, or if it is just content that they suspect is built using generative AI.

Nevertheless, this is a measure you can improve through content engineering.

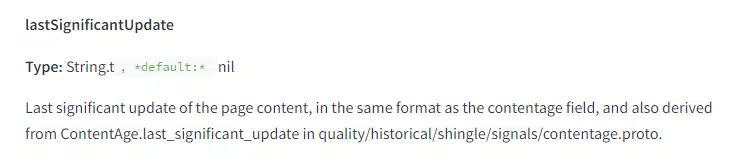

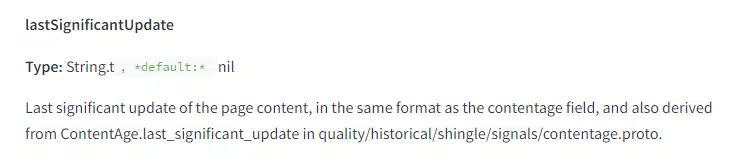

The significance of page updates is measured

The significance of a page update impacts how often a page is crawled and potentially indexed. Previously, you could simply change the dates on your page and it signaled freshness to Google, but this feature suggests that Google expects more significant updates to the page.

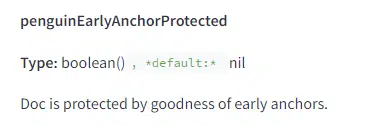

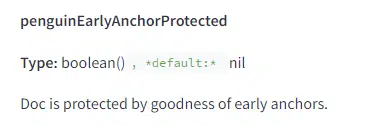
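If you want a rough internal proxy for how significant one of your own updates is, a word-level diff is one place to start. This is a minimal sketch using Python’s standard difflib. It is an assumption about a useful measurement and makes no claim about how Google computes update significance.

```python
import difflib


def update_significance(old_text: str, new_text: str) -> float:
    """Return the share of content that changed, from 0.0 (identical) to 1.0."""
    # Compare word sequences so a single changed date counts as one small edit.
    matcher = difflib.SequenceMatcher(None, old_text.split(), new_text.split())
    return 1.0 - matcher.ratio()


old = "Updated 2023 guide to apple pie baking basics"
date_only = "Updated 2024 guide to apple pie baking basics"
rewrite = "Completely new walkthrough covering crusts fillings and ovens"

print(update_significance(old, date_only) < update_significance(old, rewrite))  # True
```

A date-only change scores close to zero here, which is the kind of update the feature description suggests is no longer enough.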

Pages are protected based on earlier links in Penguin

According to the description of this feature, Penguin had pages that were considered protected based on the history of their link profile.

This, combined with the link velocity signals, could explain why Google is adamant that negative SEO attacks with links are ineffective.

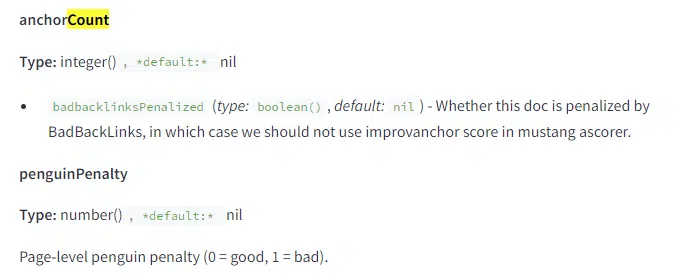

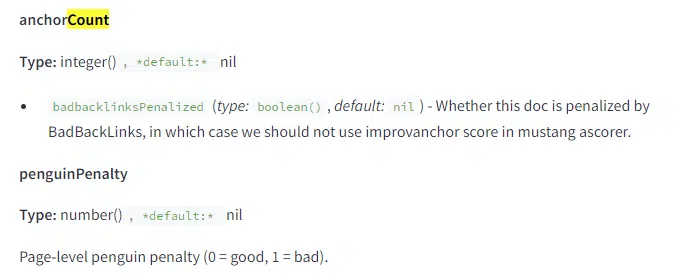

Toxic backlinks are indeed a thing

We’ve heard that “toxic backlinks” are a concept that is simply used to sell SEO software. Yet there is a badbacklinksPenalized feature associated with documents.

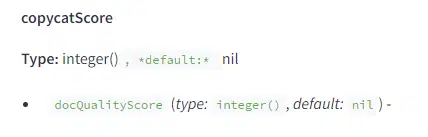

There’s a blog copycat score

In the BlogPerDocData module there is a copycat score without a definition, but it is tied to the docQualityScore.

My assumption is that it is a measure of duplication specifically for blog posts.

Mentions matter a lot

Although I have not come across anything suggesting that mentions are treated as links, there are a lot of mentions of mentions as they relate to entities.

This simply reinforces that leaning into entity-driven strategies with your content is a worthwhile addition to your strategy.

Googlebot is more capable than we thought

Googlebot’s fetching mechanism is capable of more than just GET requests.

The documentation indicates that it can do POST, PUT, or PATCH requests as well.

The team previously talked about POST requests, but the other two HTTP verbs have not been previously revealed. If you see some anomalous requests in your logs, this may be why.
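A quick way to check your own logs is sketched below. It assumes combined-format access log lines and matches on the user-agent string only, which can be spoofed, so treat hits as leads to verify rather than proof. The function name and regex are illustrative assumptions.

```python
import re

# Matches the request line, status, size, referrer and user agent
# of a combined-format access log entry.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

NON_GET_METHODS = {"POST", "PUT", "PATCH"}


def googlebot_non_get_requests(log_lines):
    """Return (method, path) pairs for non-GET requests claiming to be Googlebot."""
    hits = []
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match:
            continue
        if "Googlebot" in match.group("agent") and match.group("method") in NON_GET_METHODS:
            hits.append((match.group("method"), match.group("path")))
    return hits
```

Pass it an open log file or any iterable of lines and review whatever comes back.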

Specific measures of ‘effort’ for UGC

We’ve long believed that leveraging UGC is a scalable way to get more content onto pages and improve their relevance and freshness.

This ugcDiscussionEffortScore suggests that Google is measuring the quality of that content separately from the core content.

When we work with UGC-driven marketplaces and discussion sites, we do a lot of content strategy work related to prompting users to say certain things. That, combined with heavy moderation of the content, should be fundamental to improving the visibility and performance of those sites.

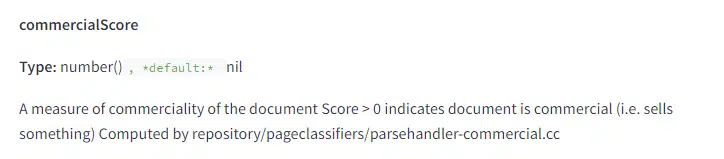

Google detects how commercial a page is

We know that intent is a heavy component of Search, but we only have measures of this on the keyword side of the equation.

Google scores documents this way as well and this can be used to stop a page from being considered for a query with informational intent.

We’ve worked with clients who actively experimented with consolidating informational and transactional page content, with the goal of improving visibility for both types of terms. This worked to varying degrees, but it’s interesting to see the score effectively considered a binary based on this description.

Cool issues I’ve seen folks do with the leaked docs

I’m fairly excited to see how the documentation is reverberating throughout the house.

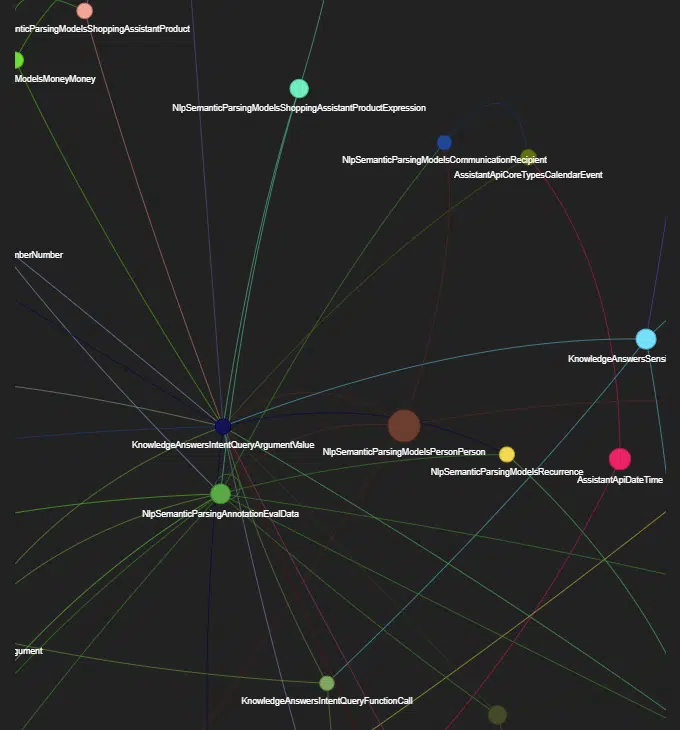

Natzir’s Google’s Rating Options Modules Relations: Natzir builds a community graph visualization software in Streamlit that reveals the relationships between modules.

WordLift’s Google Leak Reporting Device: Andrea Volpini constructed a Streamlit app that permits you to ask customized questions concerning the paperwork to get a report.

Direction on how to move forward in SEO

The power is in the crowd and the SEO community is a global team.

I don’t expect us to all agree on everything I’ve reviewed and discovered, but we are at our best when we build on our collective expertise.

Here are some things that I think are worth doing.

How to read the documents

If you haven’t had the chance to dig into the documentation on HexDocs or you’ve tried and don’t know where to start, worry not, I’ve got you covered.

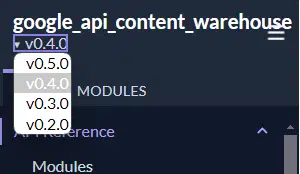

- Start from the root: This features listings of all the modules with some descriptions. In some cases attributes from the module are being displayed.

- Make sure you’re looking at the right version: v0.5.0 is the patched version. The versions prior to that have the docs we’ve been discussing.

- Scroll down until you find a module that sounds interesting to you: I focused on elements related to search, but you may be interested in Assistant, YouTube, etc.

- Read through the attributes: As you read through the descriptions of features, take note of other features referenced in them.

- Search: Perform searches for those terms in the docs.

- Repeat until you’re done: Return to step 1. As you learn more, you’ll find other things you want to search and you’ll realize certain strings might mean there are other modules that interest you.

- Share your findings: If you find something cool, share it on social or write about it. I’m happy to help you amplify.

One thing that annoys me about HexDocs is how the left sidebar covers most of the names of the modules. This makes it difficult to know what you’re navigating to.

If you don’t want to mess with the CSS, I’ve made a simple Chrome extension that you can install to make the sidebar bigger.

How your approach to SEO should change strategically

Here are some strategic things that you should more seriously consider as part of your SEO efforts.

If you are already doing all these things, you were right, you do know everything, and I salute you. 🫡

SEO and UX need to work more closely together

With NavBoost, Google is valuing clicks as one of the most important features, but we need to understand what session success means.

A search that yields a click on a result where the user does not perform another search can be a success even if they did not spend a lot of time on the site. That can indicate that the user found what they were looking for.

Naturally, a search that yields a click where a user spends 5 minutes on a page before coming back to Google is also a success. We need to create more successful sessions.

SEO is about driving people to the page, UX is about getting them to do what you want on the page. We need to pay closer attention to how components are structured and surfaced to get people to the content that they are explicitly looking for and give them a reason to stay on the site.

It’s not enough to hide what I’m looking for after a story about your grandma’s history of making apple pies with hatchets (or whatever recipe sites are doing these days). Rather, it should be more about providing the exact information, clearly displaying it, and enticing the user to remain on the page with something additionally compelling.

Pay more attention to click metrics

We treat Search Analytics data as outcomes, but Google’s ranking systems treat them as diagnostic features.

If you rank highly and you have a ton of impressions and no clicks (aside from when SiteLinks throws the numbers off) you likely have a problem.

What we are definitively learning is that there is a threshold of expectation for performance based on position. When you fall below that threshold you can lose that position.
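One way to act on this is to flag queries whose CTR sits well below what you would expect for their position. The sketch below assumes rows exported from Search Analytics with query, position, clicks and impressions fields. The expected-CTR table, the tolerance and the minimum impression count are hypothetical placeholders, since the real thresholds are unknown.

```python
# Hypothetical expected CTR by rounded position; replace with your own benchmarks.
EXPECTED_CTR = {1: 0.25, 2: 0.12, 3: 0.08}


def underperforming_queries(rows, min_impressions=100, tolerance=0.5):
    """Return queries whose CTR is below tolerance * expected CTR for their position."""
    flagged = []
    for row in rows:
        expected = EXPECTED_CTR.get(round(row["position"]))
        # Skip positions without a benchmark and queries with too little data.
        if expected is None or row["impressions"] < min_impressions:
            continue
        ctr = row["clicks"] / row["impressions"]
        if ctr < expected * tolerance:
            flagged.append(row["query"])
    return flagged
```

Flagged queries are candidates for title, description and snippet testing before the position is lost.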

Content needs to be more focused

We’ve learned definitively that Google uses vector embeddings to determine how far off a given page is from the rest of what you talk about.

This indicates that it will be challenging to go far into upper funnel content successfully without a structured expansion or without authors who have demonstrated expertise in that subject area.
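To get a feel for the idea, you can measure how far a page’s embedding sits from the average embedding of the rest of your site. This is a minimal sketch under stated assumptions: the embeddings come from whatever model you choose, the toy vectors are made up, and cosine distance from a centroid is my stand-in for the concept, not Google’s actual calculation.

```python
import math


def centroid(vectors):
    """Average a list of equal-length vectors component by component."""
    count = len(vectors)
    return [sum(v[i] for v in vectors) / count for i in range(len(vectors[0]))]


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors, or 0.0 for a zero vector."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def topical_distance(page_vector, site_vectors):
    """Return 1 - cosine similarity between a page and the site centroid."""
    return 1.0 - cosine_similarity(page_vector, centroid(site_vectors))
```

Pages with the largest distance are the ones to review for topical fit or to support with a more structured expansion.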

Encourage your authors to cultivate expertise in what they publish across the web and treat their bylines like the gold standard that they are.

SEO should always be experiment-driven

Due to the variability of the ranking systems, you cannot take best practices at face value for every space. You must test, learn and build experimentation into every SEO program.

Large sites leveraging products like SEO split testing tool Searchpilot are already on the right track, but even small sites should test how they structure and position their content and metadata to encourage stronger click metrics.

In other words, we need to actively test the SERP, not just the site.

Pay attention to what happens after they leave your site

We now have verification that Google is using data from Chrome as part of the search experience. There is value in reviewing the clickstream data that SimilarWeb and Semrush .Trends provide to see where people are going next and how you can give them that information without them leaving you.

Build keyword and content strategy around SERP format diversity

Google potentially limits the number of pages of certain content types ranking in the SERP, so checking the SERPs should become part of your keyword research.

Don’t align formats with keywords if there’s no reasonable possibility of ranking.

How your approach to SEO should change tactically

Tactically, here are some things you can consider doing differently. Shout out to Rand because a couple of these ideas are his.

Page titles can be as long as you want

We now have further evidence that the 60-70 character limit is a myth.

In my own experience we have experimented with appending more keyword-driven elements to the title and it has yielded more clicks because Google has more to choose from when it rewrites the title.

Use fewer authors on more content

Rather than using an array of freelance authors, you should work with fewer that are more focused on subject matter expertise and also write for other publications.

Focus on link relevance from sites with traffic

We’ve learned that link value is higher from pages that are prioritized higher in the index. Pages that get more clicks are pages that are likely to appear in Google’s flash memory.

We’ve also learned that Google highly values relevance. We need to stop going after link volume and focus solely on relevance.

Default to originality instead of long form

We now know originality is measured in multiple ways and can yield a boost in performance.

Some queries simply don’t require a 5,000-word blog post (I know, I know). Focus on originality and layer more information in your updates as competitors begin to copy you.

Make sure all dates associated with a page are consistent

It’s common for dates in schema to be out of sync with dates on the page and dates in the XML sitemap. All of these need to be synced to ensure Google has the best understanding of how old the content is.

As you refresh your decaying content, make sure every date is aligned so Google gets a consistent signal.
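A simple audit for this could compare the dates you have already extracted for each page. The sketch below assumes you collect them into a dictionary yourself. The source keys are hypothetical labels, and extracting the dates from the page, the schema markup and the sitemap is left out.

```python
from datetime import date


def dates_are_consistent(dates_by_source):
    """Return True when every source reports the same date."""
    return len(set(dates_by_source.values())) <= 1


def date_mismatches(dates_by_source, reference="on_page"):
    """Return the sources whose date differs from the reference source."""
    reference_date = dates_by_source[reference]
    return {
        source: found
        for source, found in dates_by_source.items()
        if found != reference_date
    }


# Hypothetical page where the sitemap was never updated
page_dates = {
    "on_page": date(2024, 5, 30),
    "schema_dateModified": date(2024, 5, 30),
    "sitemap_lastmod": date(2023, 1, 15),
}
print(date_mismatches(page_dates))  # {'sitemap_lastmod': datetime.date(2023, 1, 15)}
```

Running this across a crawl export gives you a list of pages to fix before or during a content refresh.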

Use old domains with extreme care

If you’re looking to use an old domain, it’s not enough to buy it and slap your new content on its old URLs. You need to take a structured approach to updating the content to phase out what Google has in its long-term memory.

You may even want to avoid there being a transfer of ownership in registrars until you’ve systematically established the new content.

Make gold-standard documents

We now have evidence that quality raters are doing feature engineering for Google engineers to train their classifiers. You want to create content that quality raters would score as high quality so your content has a small influence over the next core update.

Bottom line

It’s shortsighted to say nothing should change. Based on this information, I think it’s time for us to reconsider our best practices.

Let’s keep what works and dump what’s not valuable. Because, I tell you what, there’s no text-to-code ratio in these documents, but several of your SEO tools will tell you your site is falling apart because of it.

A lot of people have asked me how we can repair our relationship with Google moving forward.

I would prefer that we get back to a more productive space to improve the web. After all, we are aligned in our goals of making search better.

I don’t know that I have a complete solution, but I think an apology and owning their role in misdirection would be a good start. I have a few other ideas that we should consider.

- Develop working relationships with us: On the advertising side, Google wines and dines its clients. I understand that they don’t want to show any sort of favoritism on the organic side, but Google needs to be better about developing actual relationships with the SEO community. Perhaps a structured program with OKRs that is similar to how other platforms treat their influencers makes sense. Right now things are pretty ad hoc where certain people get invited to events like I/O or to secret meeting rooms during the (now-defunct) Google Dance.

- Bring back the annual Google Dance: Hire Lily Ray to DJ and make it about celebrating annual OKRs that we have achieved through our partnership.

- Work together on more content: The bidirectional relationships that people like Martin Splitt have cultivated through his various video series are strong contributions where Google and the SEO community have come together to make things better. We need more of that.

- We want to hear from the engineers more: I’ve gotten the most value out of hearing directly from search engineers. Paul Haahr’s presentation at SMX West 2016 lives rent-free in my head and I still refer back to videos from the 2019 Search Central Live Conference in Mountain View regularly. I think we’d all benefit from hearing directly from the source.

Everybody keep up the good work

I’ve seen some fantastic things come out of the SEO community in the past 48 hours.

I’m energized by the fervor with which everyone has consumed this material and offered their takes, even when I don’t agree with them. This type of discourse is healthy and what makes our industry special.

I encourage everyone to keep going. We’ve been training our whole careers for this moment.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.