In our last article, A How-To Guide on Buying AI Systems, we explained why the IEEE P3119 Standard for the Procurement of Artificial Intelligence (AI) and Automated Decision Systems (ADS) is needed.

In this article, we give further details about the draft standard and the use of regulatory “sandboxes” to test the developing standard against real-world AI procurement use cases.

Strengthening AI procurement practices

The IEEE P3119 draft standard is designed to help strengthen AI procurement approaches, using due diligence to ensure that agencies critically evaluate the AI services and tools they acquire. The standard can give government agencies a way to ensure transparency from AI vendors about associated risks.

The standard is not meant to replace traditional procurement processes, but rather to optimize established practices. IEEE P3119’s risk-based approach to AI procurement follows the general principles in IEEE’s Ethically Aligned Design treatise, which prioritizes human well-being.

The draft guidance is written in accessible language and includes practical tools and rubrics. For example, it includes a scoring guide to help analyze the claims vendors make about their AI solutions.

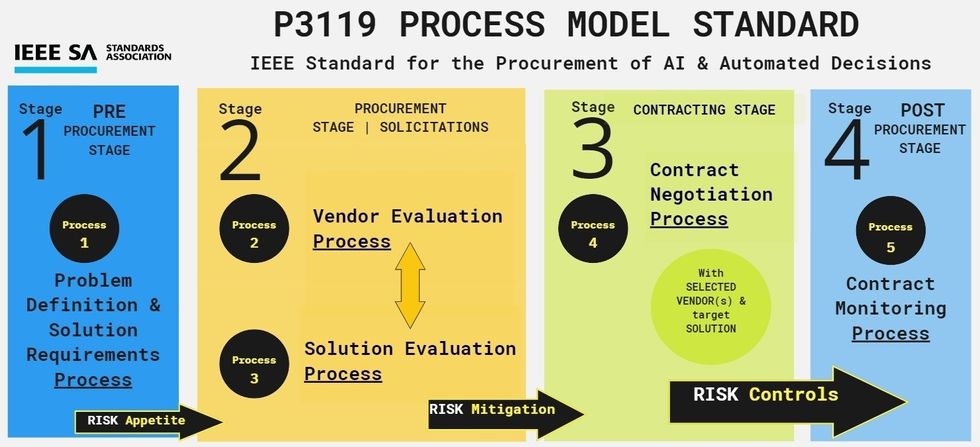

The IEEE P3119 standard includes five processes that will help users identify, mitigate, and monitor harms commonly associated with high-risk AI systems, such as the automated decision systems found in education, health, employment, and many public sector areas.

An overview of the standard’s five processes is depicted below.

Gisele Waters

Steps for defining problems and business needs

The five processes are 1) defining the problem and solution requirements, 2) evaluating vendors, 3) evaluating solutions, 4) negotiating contracts, and 5) monitoring contracts. These occur across four stages: pre-procurement, procurement, contracting, and post-procurement. The processes will be integrated into what already happens in conventional global procurement cycles.

While the working group was developing the standard, it discovered that traditional procurement approaches often skip a pre-procurement stage of defining the problem or business need. Today, AI vendors offer solutions looking for problems instead of addressing problems that need solutions. That’s why the working group created tools to help agencies define a problem and assess the organization’s appetite for risk. These tools help agencies proactively plan procurements and outline appropriate solution requirements.

During the stage in which bids are solicited from vendors (often called the “request for proposals” or “invitation to tender” stage), the vendor evaluation and solution evaluation processes work in tandem to provide a deeper analysis. The vendor’s organizational AI governance practices and policies are assessed and scored, as are its solutions. Under the standard, buyers will be required to obtain robust disclosure about the target AI systems to better understand what is being sold. These AI transparency requirements are missing from existing procurement practices.

The contracting stage addresses gaps in existing software and information technology contract templates, which do not adequately account for the nuances and risks of AI systems. The standard offers reference contract language inspired by Amsterdam’s Contractual Terms for Algorithms, the European model contractual clauses, and clauses issued by the Society for Computers and Law AI Group.


Buyers will be able to help control the risks they identified in the earlier processes by aligning them with curated clauses in their contracts. This reference contract language can be indispensable to agencies negotiating with AI vendors. When technical knowledge of the product being procured is extremely limited, curated clauses can help agencies negotiate with AI vendors and advocate to protect the public interest.

The post-procurement stage involves monitoring for the identified risks, as well as for compliance with the terms and conditions embedded in the contract. Key performance indicators and metrics are also continuously assessed.

The five processes offer a risk-based approach that most agencies can apply across a variety of AI procurement use cases.

Sandboxes explore innovation and existing processes

In advance of the market deployment of AI systems, sandboxes are opportunities to explore and evaluate existing processes for the procurement of AI solutions.

Sandboxes are commonly used in software development. They are isolated environments where new concepts and simulations can be tested. Harvard’s AI Sandbox, for example, enables university researchers to study security and privacy risks in generative AI.

Regulatory sandboxes are real-life testing environments for technologies and procedures that are not yet fully compliant with existing laws and regulations. They are typically enabled for a limited time period in a “safe space” where legal constraints are often “reduced” and agile exploration of innovation can occur. Regulatory sandboxes can contribute to evidence-based lawmaking and can provide feedback that allows agencies to identify potential challenges to new laws, standards, and technologies.

We sought a regulatory sandbox to test our assumptions and the components of the developing standard, aiming to explore how the standard would fare on real-world AI use cases.

In search of sandbox partners last year, we engaged with 12 government agencies representing local, regional, and transnational jurisdictions. The agencies all expressed interest in responsible AI procurement. Together, we advocated for a sandbox “proof of concept” collaboration in which the IEEE Standards Association, IEEE P3119 working group members, and our partners could test the standard’s guidance and tools against a retrospective or future AI procurement use case. Over several months of meetings, we have learned which agencies have personnel with both the authority and the bandwidth needed to partner with us.

Two entities in particular have shown promise as potential sandbox partners: an agency representing the European Union and a consortium of local government councils in the United Kingdom.

Our aspiration is to use a sandbox to assess the differences between current AI procurement procedures and what they could become if the draft standard were used to adapt the status quo. For mutual gain, the sandbox would test for strengths and weaknesses in both existing procurement practices and the components we have drafted for IEEE P3119.

After conversations with government agencies, we faced the reality that a sandbox collaboration requires lengthy authorizations and careful consideration by both IEEE and the government entity. The European agency, for instance, must comply with the EU AI Act, the General Data Protection Regulation, and its own acquisition regimes while managing procurement processes. Likewise, the U.K. councils bring requirements from their multi-layered regulatory environment.

These requirements, while not surprising, should be recognized as substantial technical and political challenges to getting sandboxes approved. Regulatory sandboxes, especially for AI-enabled public services in high-risk domains, play a critical role in informing innovation in procurement practices.

A regulatory sandbox can help us learn whether a voluntary consensus-based standard can make a difference in the procurement of AI solutions. Testing the standard in collaboration with sandbox partners would give it a better chance of successful adoption. We look forward to continuing our discussions and engagements with our potential partners.

The approved IEEE 3119 standard is expected to be published early next year and possibly before the end of this year.
